this post was submitted on 13 Jul 2025

657 points (97.0% liked)

Comic Strips



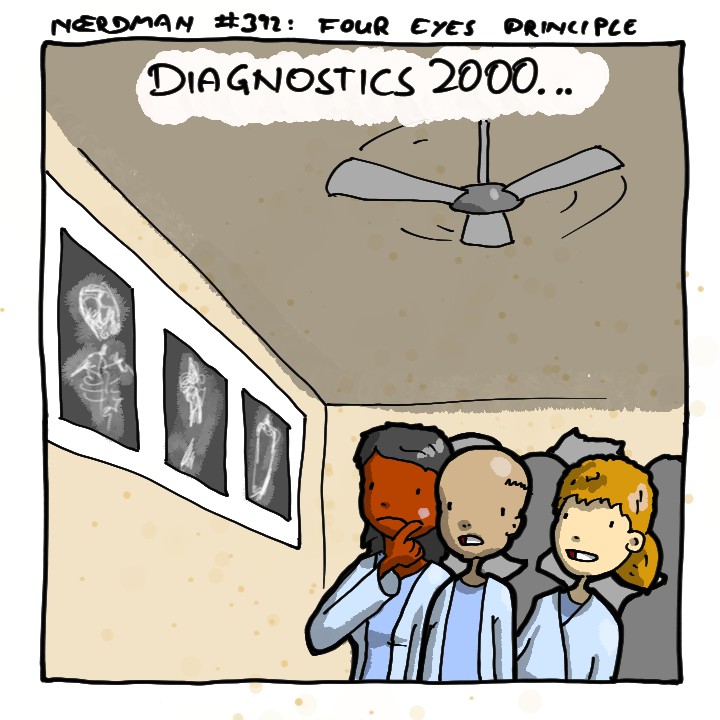

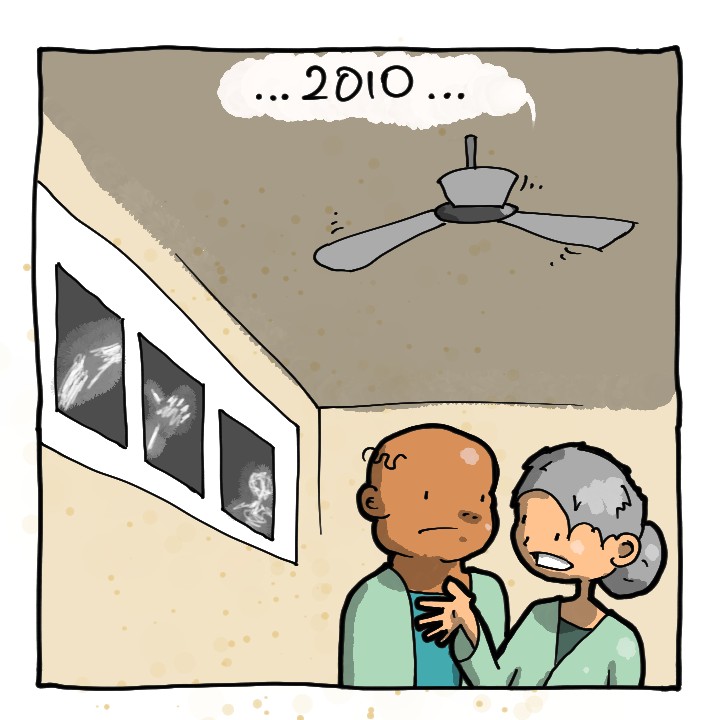

iirc the reason it still isn't used is that, even though it was trained by highly skilled professionals, it had some pretty bad race and gender biases, and it only hit its reported accuracy with white, male patients.

Plus the publicly released results were fairly cherry-picked for their quality.

Medical sciences in general have terrible gender and racial biases. My basic understanding is that it has got better in the past 10 years or so, but past scientific literature is littered with inaccuracies that we are still going along with. I'm thinking drugs specifically, but I suspect it generalizes.

Yeah, there were also several stories where the AI just detected that all the pictures of the illness had e.g. a ruler in them, whereas the control pictures did not. It's easy to produce impressive results when your methodology sucks. And unfortunately, those results will get reported on before peer reviews are in and before others have attempted to reproduce the results.
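To make the ruler problem concrete, here is a toy Python sketch. Everything in it is made up for illustration: the `has_ruler` and `sick` fields, the sample counts, and the `shortcut_classifier` function, which stands in for a model that has learned nothing except "ruler means sick". It is not how any real study was run; it only shows why such a shortcut looks great on a biased dataset and falls apart on a fairer one.

```python
# Toy illustration with invented data, not taken from any real study.

def shortcut_classifier(sample):
    # Hypothetical "model" that learned only the shortcut:
    # it predicts "sick" whenever a ruler is visible in the picture.
    return sample["has_ruler"]

def accuracy(samples):
    # Fraction of samples where the prediction matches the true label.
    correct = sum(shortcut_classifier(s) == s["sick"] for s in samples)
    return correct / len(samples)

# Biased set: a ruler appears in every "sick" picture and in no control picture.
biased = (
    [{"has_ruler": True, "sick": True}] * 50
    + [{"has_ruler": False, "sick": False}] * 50
)

# Fairer set: rulers appear equally often in both classes.
balanced = (
    [{"has_ruler": True, "sick": True}] * 25
    + [{"has_ruler": False, "sick": True}] * 25
    + [{"has_ruler": True, "sick": False}] * 25
    + [{"has_ruler": False, "sick": False}] * 25
)

biased_acc = accuracy(biased)      # 1.0: looks perfect
balanced_acc = accuracy(balanced)  # 0.5: no better than a coin flip

print(f"biased set:   {biased_acc:.0%}")
print(f"balanced set: {balanced_acc:.0%}")
```

The "model" never looks at the illness at all, yet it scores 100% on the biased set, which is the kind of result that gets reported before anyone tries to reproduce it.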

That reminds me: I'm pretty sure that in at least one of these AI medical tests, the model was reading metadata on the input image that included the diagnosis.