404 Media

404 Media is a new independent media company founded by technology journalists Jason Koebler, Emanuel Maiberg, Samantha Cole, and Joseph Cox.


Young people have always felt misunderstood by their parents, but new research shows that Gen Alpha might also be misunderstood by AI. A research paper, written by Manisha Mehta, a soon-to-be 9th grader, and presented today at the ACM Conference on Fairness, Accountability, and Transparency in Athens, shows that Gen Alpha’s distinct mix of meme- and gaming-influenced language might be challenging automated moderation used by popular large language models.

The paper compares kid, parent, and professional moderator performance in content moderation to that of four major LLMs: OpenAI’s GPT-4, Anthropic’s Claude, Google’s Gemini, and Meta’s Llama 3. They tested how well each group and AI model understood Gen Alpha phrases, as well as how well they could recognize the context of comments and analyze potential safety risks involved.

Mehta, who will be starting 9th grade in the fall, recruited 24 of her friends to create a dataset of 100 “Gen Alpha” phrases. This included expressions that might be mocking or encouraging depending on the context, like “let him cook” and “ate that up”, as well as expressions from gaming and social media contexts like “got ratioed”, “secure the bag”, and “sigma”.

“Our main thesis was that Gen Alpha has no reliable form of content moderation online,” Mehta told me over Zoom, using her dad’s laptop. She described herself as a definite Gen Alpha. Last August, she met her (adult) co-author, who is supervising her dad’s PhD. She has seen friends experience online harassment and worries that parents aren’t aware of how young people’s communication styles open them up to risks. “And there’s a hesitancy to ask for help from their guardians because they just don’t think their parents are familiar enough [with] that culture,” she says.

Given the Gen Alpha phrases, “all non-Gen Alpha evaluators—human and AI—struggled significantly,” in the categories of “Basic Understanding” (what does a phrase mean?), “Contextual Understanding” (does it mean something different in different contexts?), and “Safety Risk” (is it toxic?). This was particularly true for “emerging expressions” like skibidi and gyatt, for phrases that can be used ironically or in different ways, and for insults hidden in innocent comments. Part of this is due to the unusually rapid speed of Gen Alpha’s language evolution; a model trained on today’s hippest lingo might be totally bogus when it’s published in six months.

In the tests, kids broadly recognized the meaning of their own generation-native phrases, scoring 98, 96, and 92 percent in each of the three categories. However, both parents and professional moderators “showed significant limitations,” according to the paper; parents scored 68, 42, and 35 percent in those categories, while professional moderators did barely any better with 72, 45, and 38 percent. In real-life terms, these numbers mean that a parent might recognize only about one in three instances of their child being bullied in their Instagram comments.

The four LLMs performed about the same as the parents, suggesting that the data used to train the models may skew toward more “grown-up” language. This makes sense since pretty much all novelists are older than 15, but it also means that content-moderation AIs tasked with maintaining young people’s online safety might not be linguistically equipped for the job.

Mehta explains that Gen Alpha, born between 2010-ish and last-year-ish, are the first cohort to be born fully post-iPhone. They are spending unprecedented amounts of their early childhoods online, where their interactions can’t be effectively monitored. And, due to the massive volumes of content they produce, a lot of the moderation of the risks they face is necessarily being handed to ineffective automatic moderation tools with little parental oversight. Against a backdrop of steadily increasing exposure to online content, Gen Alpha’s unique linguistic habits pose unique challenges for safety.

From 404 Media via this RSS feed


A federal judge in California ruled Monday that Anthropic likely violated copyright law when it pirated authors’ books to create a giant dataset and "forever" library but that training its AI on those books without authors' permission constitutes transformative fair use under copyright law. The complex decision is one of the first of its kind in a series of high-profile copyright lawsuits brought by authors and artists against AI companies, and it’s largely a very bad decision for authors, artists, writers, and web developers.

This case, in which authors Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson sued Anthropic, maker of the Claude family of large language models, is one of dozens of high-profile lawsuits brought against AI giants. The authors sued Anthropic because the company downloaded full copies of their books from a now-notorious dataset called Books3, as well as from the piracy websites LibGen and Pirate Library Mirror (PiLiMi), to train its AI models. The suit also claims that Anthropic bought used physical copies of books and scanned them for the purposes of training AI.

"From the start, Anthropic ‘had many places from which’ it could have purchased books, but it preferred to steal them to avoid ‘legal/practice/business slog,’ as cofounder and chief executive officer Dario Amodei put it. So, in January or February 2021, another Anthropic cofounder, Ben Mann, downloaded Books3, an online library of 196,640 books that he knew had been assembled from unauthorized copies of copyrighted books — that is, pirated," William Alsup, a federal judge for the Northern District of California, wrote in his decision Monday. "Anthropic’s next pirated acquisitions involved downloading distributed, reshared copies of other pirate libraries. In June 2021, Mann downloaded in this way at least five million copies of books from Library Genesis, or LibGen, which he knew had been pirated. And, in July 2022, Anthropic likewise downloaded at least two million copies of books from the Pirate Library Mirror, or PiLiMi, which Anthropic knew had been pirated."


Fansly, a popular platform where independent creators—many of whom are making adult content—sell access to images and videos to subscribers and fans, announced sweeping changes to its terms of service on Monday, including effectively banning furries.

The changes blame payment processors for classifying “some anthropomorphic content as simulated bestiality.” Most people in the furry fandom condemn bestiality and anything resembling it, but payment processors—which have increasingly dictated strict rules for adult sexual content for years—seemingly don’t know the difference and are making it creators’ problem.

The changes include new policies that ban chatbots or image generators that respond to user prompts; content featuring alcohol, cannabis, or “other intoxicating substances”; and selling access to Snapchat content or content from other social media platforms if doing so violates those platforms’ terms of service.

Under a section called “policy clarifications” in an email announcing the changes, Fansly wrote:

“Anthropomorphic Content - Our payment processing partners classify some anthropomorphic content as simulated bestiality. As a general guideline, Kemonomimi (human-like characters with animal ears/tails) is permitted, but full fursonas, Kemono, and scalie content are prohibited in adult contexts.

Hypnosis/Mind Control - This prohibition includes all synonymous terms (mesmerize, mindfuck, mindcontrol, hypno) regardless of context.

Wrestling Content - Professional, studio-produced adult wrestling is permitted. Amateur or party wrestling content is prohibited as it may be interpreted as non-consensual activity. Even when consensual, content that simulates force, struggle, or non-consent violates our policies.

Public Spaces & Recording Locations - Sexual activity is prohibited in all public spaces. Nudity is prohibited in public spaces where it's not legally permitted. Both nudity and sexual activity are prohibited on third-party private property without owner permission or in any location visible to the public.”

If creators fall into “one of these impacted niches,” Fansly wrote in the email, they’ll need to review and remove any content that’s against the updated terms of service by June 28. “We'll conduct reviews after June 28th to ensure platform-wide compliance,” the platform said.

Furries who use fursuits in their content are popular on Fansly, as are Vtubers (people who use a virtual avatar, often controlled by motion-tracking software) who use furry or anthropomorphic cosplay.

“I will have to spend a few hundred dollars as a Vtuber to go and completely REPLACE my model,” Amity, a Vtuber creator on Fansly, told me. She’ll have to remove all her existing furry content, too.

“Fansly is my main source of income. It is how I support myself,” Amity said. “And this screws me and several other content creators over in a bad way. Fansly has been a safe haven for furries and people that aren’t allowed to use Onlyfans or other such sites. Accepting us with open arms, until now...”

“The majority of the blame for this change lies with the payment processors and I wish we had one that didn’t try to police adult content like current processors do,” TyTyVR, another Fansly creator, told me. “However, I cannot help but feel like we’ve been betrayed by Fansly. They would rather drop their creators than let go of the payment processors that are dictating what can and can’t be posted.”

In 2021, OnlyFans announced that it would ban “sexually-explicit conduct” from the site, citing payment processor pressure. It reversed the decision days later, after widespread public backlash. Fansly said at the time that it was receiving “4,000 applications an hour” from creators looking to move to the site in the days after OnlyFans said it was banning sexually-explicit content.

“Thank you, we won't let you down,” Fansly wrote on Twitter.

Last year, Stripe dropped Wishtender, a platform that allows people to make wishlists and send gifts anonymously.

And in September 2024, Patreon made changes to its content guidelines that “added nuance under ‘Bestiality’ to clarify the circumstances in which it is permitted for human characters to have sexual interactions with fictional mythological creatures,” specifying that “sexual interaction between a human and a fictional mythological creature that is more humanistic than animal (i.e. anthropomorphic, bipedal, and/or sapient)” is permitted, while portrayals that fall outside those guidelines are not.

Fansly did not respond to a request for comment.


A new site, FuckLAPD.com, is using public records and facial recognition technology to let anyone who has a picture of a Los Angeles police officer identify that officer. The tool, made by artist Kyle McDonald, is designed to help people identify cops who may otherwise try to conceal their identity, for example by covering their badge or serial number.

“We deserve to know who is shooting us in the face even when they have their badge covered up,” McDonald told me when I asked if the site was made in response to police violence during the LA protests against ICE that started earlier this month. “fucklapd.com is a response to the violence of the LAPD during the recent protests against the horrific ICE raids. And more broadly—the failure of the LAPD to accomplish anything useful with over $2B in funding each year.”

“Cops covering up their badges? ID them with their faces instead,” reads the site, which McDonald said went live this Saturday. The tool allows users to upload an image of a police officer’s face to search over 9,000 LAPD headshots obtained via public record requests. The site says image processing happens on the device, and no photos or data are transmitted or saved on the site. “Blurry, low-resolution photos will not match,” the site says.
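The site does not describe its matching method beyond on-device processing and rejecting blurry photos. As a rough, hypothetical illustration only: many face-search tools convert each face into a numeric "embedding" with a face model, compare the uploaded face against precomputed embeddings of a reference set, and report the closest match only if it clears a similarity threshold. The sketch below assumes the embeddings already exist (the face model is not shown); the names `cosine` and `best_match`, the 0.8 threshold, and the toy three-number vectors are all invented for illustration, and real embeddings have hundreds of dimensions.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(
    query: List[float],
    gallery: Dict[str, List[float]],
    threshold: float = 0.8,  # hypothetical cutoff; weak matches are rejected
) -> Optional[Tuple[str, float]]:
    """Return (name, score) for the most similar gallery entry, or None if nothing clears the threshold."""
    best_name: Optional[str] = None
    best_score = -1.0
    for name, embedding in gallery.items():
        score = cosine(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_name is None or best_score < threshold:
        return None
    return best_name, best_score
```

Everything here runs locally with no network calls, which is consistent with the site's claim that images stay on the device. A thresholded search like this returns no match at all when the query embedding is ambiguous, which is one way low-quality photos could end up producing no result.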


Unless you’re living in a ChatGPT hype-bro bubble, it’s a pretty common sentiment these days that the internet is getting shittier. Social media algorithms have broken our brains, AI slop flows freely through Google search results like raw sewage, and tech companies keep telling us that this new status quo is not only inevitable, but Good.

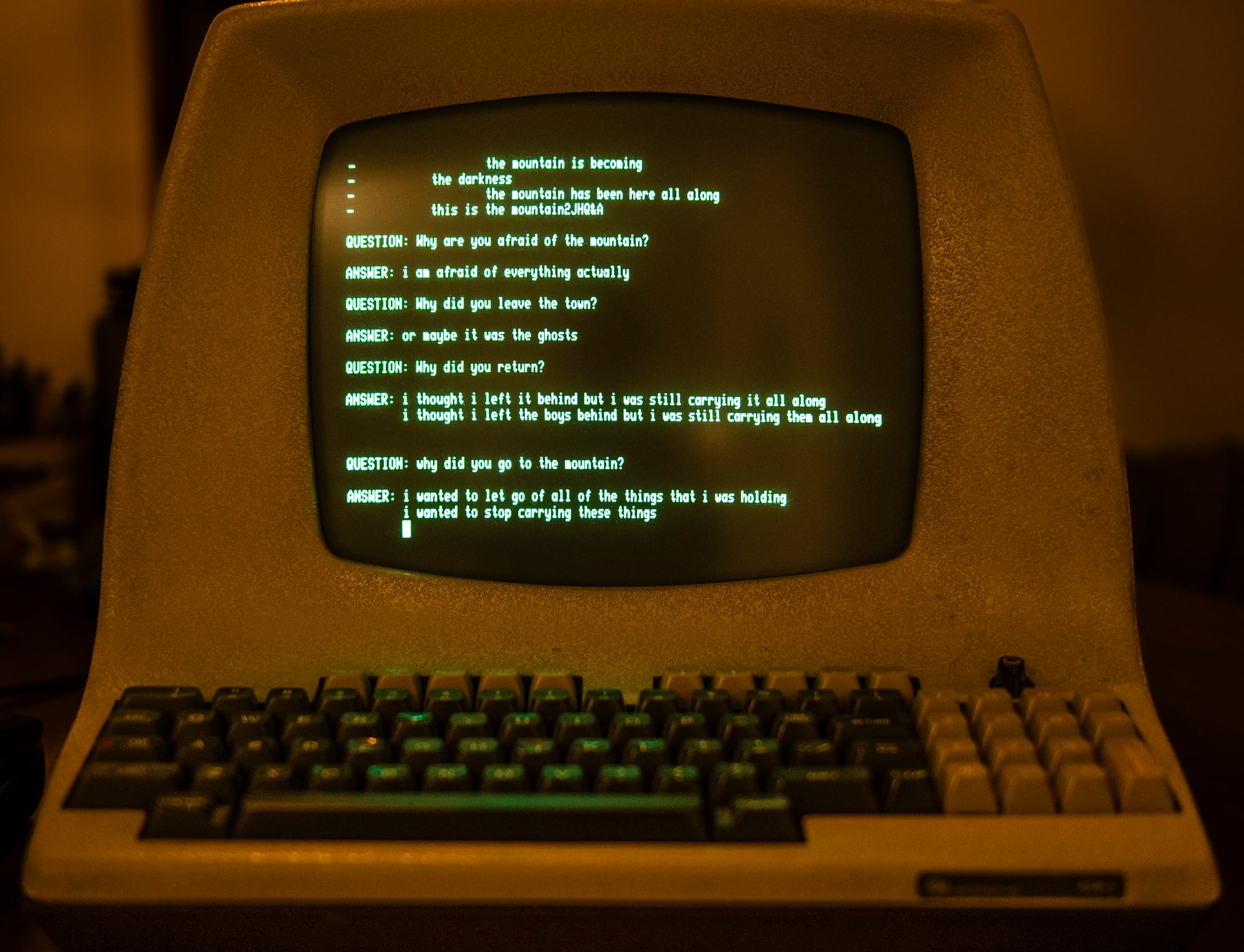

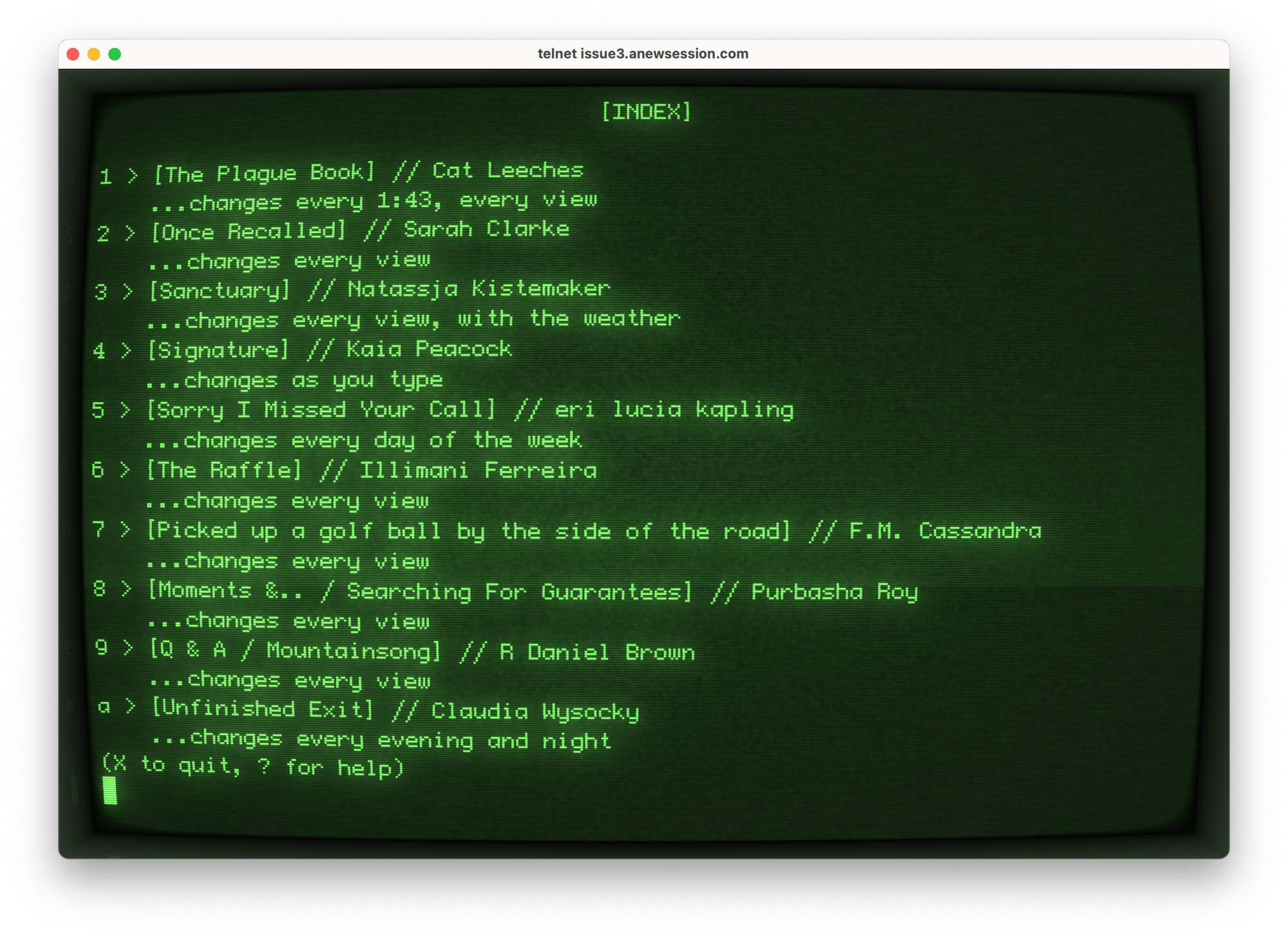

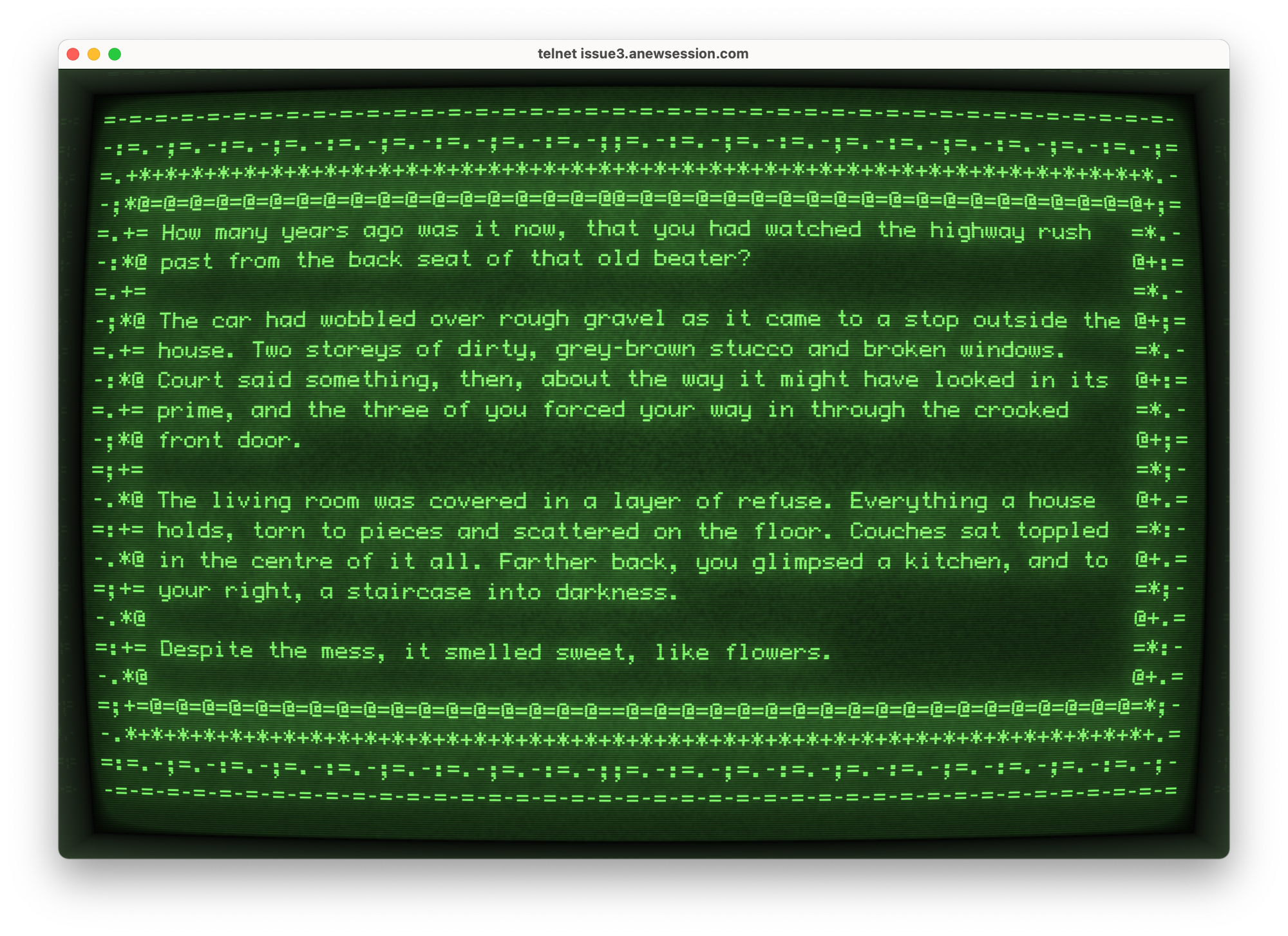

Standing in stark opposition to these trends is New Session, an online literary zine accessed via the ancient-but-still-functional internet protocol Telnet.

Like any other zine, New Session features user-submitted poems, essays, and other text-based art. But the philosophy behind each of its digital pages is anything but orthodox.

“In the face of right-wing politics, climate change, a forever pandemic, and the ever-present hunger of imperialist capitalism, we have all been forced to adapt,” reads the intro to New Session’s third issue, titled Adaptations, which was released earlier this month. “Both you and this issue will change with each viewing. Select a story by pressing the key associated with it in the index. Read it again. Come back to it tomorrow. Is it the same? Are you?”

The digital zine is accessible on the web via a browser-based Telnet client, or if you’re a purist like me, via the command line. As the intro promises, each text piece changes, or adapts, depending on various conditions, like what time of day you access it or how many times you’ve viewed it. Some pieces change every few minutes, while others update every time a user looks at them, like gazing at fish inside a digital aquarium.
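New Session's code isn't described here, so the following is only a hypothetical sketch of how a piece could "adapt" to the two conditions mentioned above: it picks one of several text variants based on the hour of day, shifted by how many times the reader has viewed it. The names `VARIANTS` and `pick_variant`, the sample lines, and the rule for combining hour and view count are all invented for illustration.

```python
from datetime import datetime
from typing import Optional, Sequence

# Hypothetical text variants for one piece; the zine's real texts and rules differ.
VARIANTS = (
    "The fish circle the tank at dawn.",
    "At noon the water is bright and still.",
    "By night only the filter hums.",
)


def pick_variant(
    view_count: int,
    now: Optional[datetime] = None,
    variants: Sequence[str] = VARIANTS,
) -> str:
    """Choose a variant from the time of day, shifted by the reader's view count."""
    now = now or datetime.now()
    # Split the 24-hour day into len(variants) equal periods (8 hours each for 3 variants).
    period = now.hour * len(variants) // 24
    # Each additional viewing rotates to the next variant.
    return variants[(period + view_count) % len(variants)]
```

For example, a first visit at 9 a.m. falls in the second 8-hour period and shows the second variant, and a second visit at the same hour rotates to the third.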

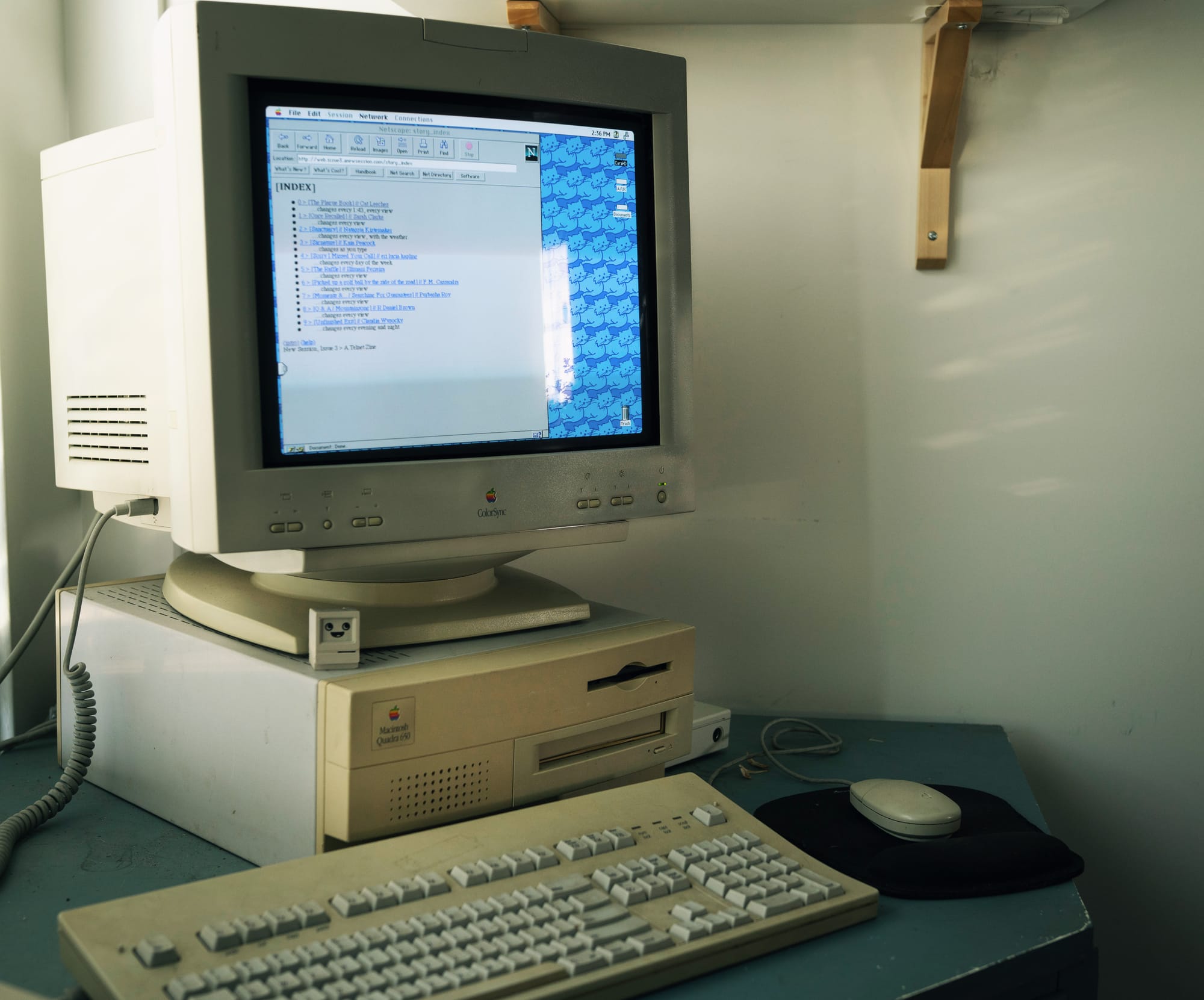

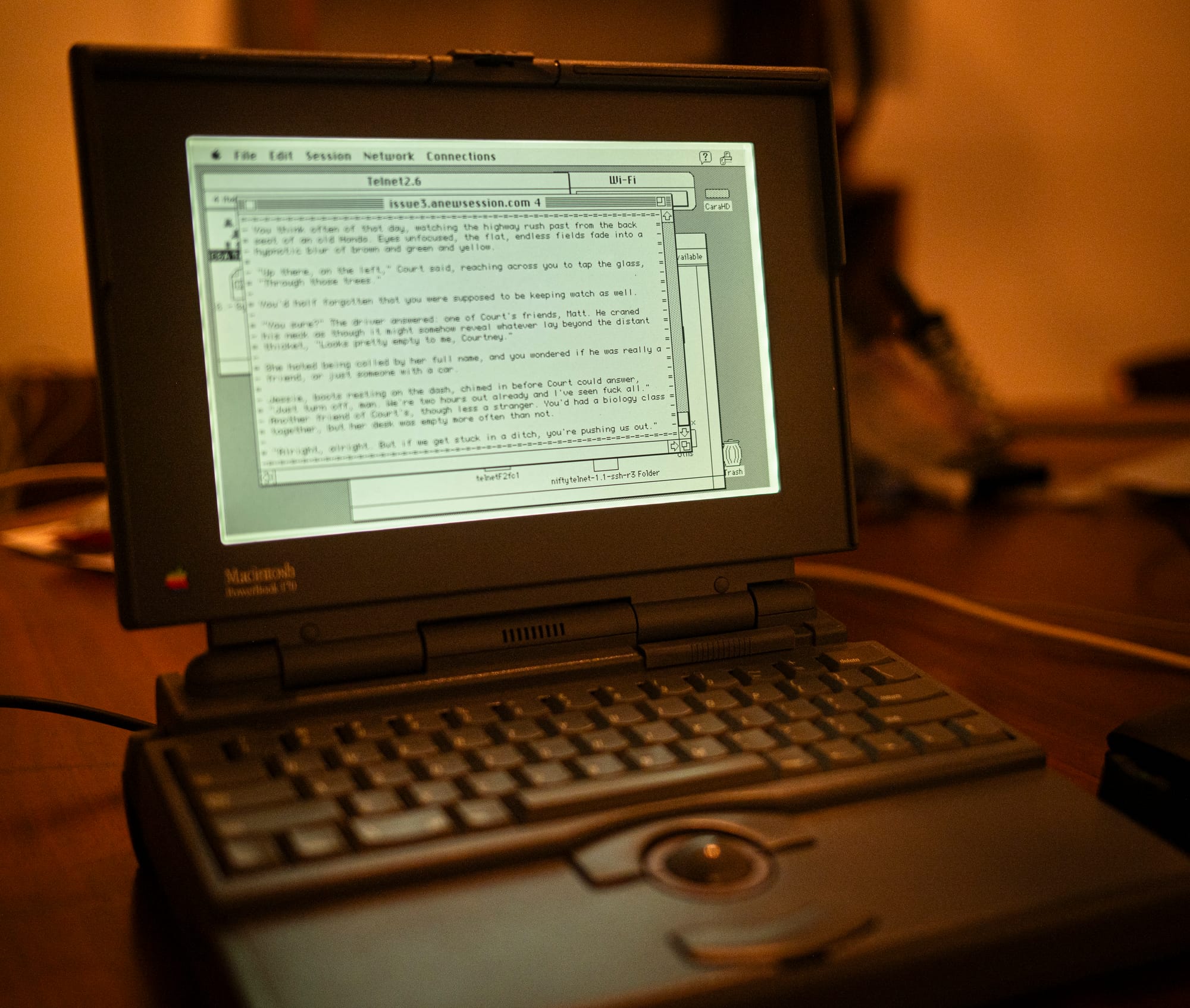

How New Session looks on Telnet. Images courtesy Cara Esten Hurtle

Once logged in, the zine’s main menu lists each piece along with the conditions that cause it to change. For example, Natasja Kisstemaker’s “Sanctuary” changes with every viewing, based on the current weather. “Signature,” by Kaia Peacock, updates every time you press a key, slowly revealing more of the piece when you type a letter contained in the text—like a word puzzle on Wheel of Fortune.
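The Wheel of Fortune-style mechanic in "Signature" can be approximated in a few lines. This is a hypothetical sketch, not Peacock's or Hurtle's implementation: letters stay hidden behind underscores until the reader types them, while spaces and punctuation are always shown. The function name `reveal` is invented for illustration.

```python
from typing import Set


def reveal(text: str, guessed: Set[str]) -> str:
    """Show letters the reader has typed, mask other letters with '_', keep non-letters visible."""
    return "".join(
        ch if not ch.isalpha() or ch.lower() in guessed else "_"
        for ch in text
    )
```

In a Telnet session, each keypress would add one lowercase letter to `guessed` and redraw the screen; after typing "e" and "a", `reveal("Read it again.", {"e", "a"})` gives `_ea_ __ a_a__.`.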

Cara Esten Hurtle, an artist and software engineer based in the Bay Area, co-founded New Session in 2021 along with Lo Ferris, while searching for something to do with her collection of retro computers during the early days of the COVID-19 pandemic.

“I realized I’d been carrying around a lot of old computers, and I thought it would be cool to be able to do modern stuff on these things,” Hurtle told 404 Media. “I wanted to make something that was broadly usable across every computer that had ever been made. I wanted to be like, yeah, you can run this on a 1991 Thinkpad someone threw away, or you could run it on your modern laptop.”

If you’re of a certain age, you might remember Telnet as a server-based successor to BBS message boards, the latter of which operated by connecting computers directly. It hearkens back to a slower internet age, where you’d log in maybe once or twice a day to read what’s new. Technically, Telnet predates the internet itself, originally developed as a networked teletype system in the late ‘60s for the internet’s military precursor, the ARPAnet. Years later, it was officially adopted as one of the earliest internet protocols, and today it remains the oldest application protocol still in use—though mainly by enthusiasts like Hurtle.
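Part of why Telnet still runs on nearly any machine is how little it demands of a client. Under RFC 854, a server can mix option-negotiation commands into the byte stream, each starting with the IAC ("interpret as command") byte, 255, and a bare-bones client may simply decline every option. The sketch below is a simplified, hypothetical helper (`split_telnet` is an invented name) that separates printable data from those commands and builds the refusal replies. It assumes commands are never split across reads and ignores subnegotiation (SB/SE), which a real client would need to handle.

```python
from typing import Tuple

# Telnet command bytes defined in RFC 854.
IAC, DONT, DO, WONT, WILL = 255, 254, 253, 252, 251


def split_telnet(chunk: bytes) -> Tuple[bytes, bytes]:
    """Return (data, replies): the chunk with commands stripped, plus replies refusing every option.

    Simplified sketch: assumes no command is split across chunks and ignores SB ... SE.
    """
    data = bytearray()
    replies = bytearray()
    i = 0
    while i < len(chunk):
        b = chunk[i]
        if b != IAC:
            data.append(b)  # ordinary text byte
            i += 1
            continue
        if i + 1 >= len(chunk):
            break  # lone IAC at the end of the chunk; dropped in this sketch
        cmd = chunk[i + 1]
        if cmd == IAC:
            data.append(IAC)  # IAC IAC encodes a literal 0xFF data byte
            i += 2
        elif cmd in (DO, DONT, WILL, WONT) and i + 2 < len(chunk):
            opt = chunk[i + 2]
            if cmd == DO:
                replies += bytes([IAC, WONT, opt])  # server asks us to enable opt: refuse
            elif cmd == WILL:
                replies += bytes([IAC, DONT, opt])  # server offers opt: decline
            # DONT/WONT need no reply because we never enable any option.
            i += 3
        else:
            i += 2  # other two-byte commands (e.g. NOP, GA) are skipped
    return bytes(data), bytes(replies)
```

In a real client, `data` would be printed to the screen and `replies` written back to the socket. Refusing everything leaves the connection in Telnet's default plain-text mode, which is about as portable as networking gets.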

New Session intentionally embraces this slower pace, making it more like lightly interactive fiction than a computer game. For Hurtle, the project isn’t just retro novelty; it’s a radical rejection of the addictive social media and algorithmic attention-mining that have defined the modern-day internet.

New Session viewed on a variety of Hurtle's collection of machines. Photos courtesy Cara Esten Hurtle

“I want it to be something where you don’t necessarily feel like you have to spend a ton of time with it,” said Hurtle. “I want people to come back to it because they’re interested in the stories in the same way you’d come back to a book—not to get your streak on Duolingo.”

I won’t go into too much detail, because discovering how the pieces change is kind of the whole point. But on the whole, reading New Session feels akin to a palate cleanser after a long TikTok binge. Its very design evokes the polar opposite of the hyper-consumerist mindset that brought us infinite scrolls and algorithmic surveillance. The fact that you literally can’t consume it all in one session forces readers to engage with the material more slowly and meaningfully, piquing curiosity and exercising intuition.

At the same time, the zine isn’t meant to be a nostalgic throwback to simpler times. New Session specifically solicits works from queer and trans writers and artists, as a way to reclaim a part of internet history that was credited almost entirely to white straight men. But Hurtle says revisiting things like Telnet can also be a way to explore paths not taken, and re-assess ideas that were left in the dustbin of history.

“You have to avoid the temptation to nostalgize, because that’s really dangerous and it just turns you into a conservative boomer,” laughs Hurtle. “But we can imagine what aspects of this we can take and claim for our own. We can use it as a window to understand what’s broken about the current state of the internet. You just can’t retreat to it.”

Projects like New Session make a lot of sense in a time when more people are looking backward to earlier iterations of the internet—not to see where it all went wrong, but to excavate old ideas that could have shaped it in a radically different way, and perhaps still can. It’s a reminder of that hidden, universal truth—to paraphrase the famous David Graeber quote—that the internet is a thing we make, and could just as easily make differently.


A new paper from researchers at Stanford, Cornell, and West Virginia University seems to show that one version of Meta’s flagship AI model, Llama 3.1, has memorized almost the whole of the first Harry Potter book. This finding could have far-reaching copyright implications for the AI industry and impact authors and creatives who are already part of class-action lawsuits against Meta.

Researchers tested a number of widely available free large language models to see what percentage of 56 different books they could reproduce. The researchers fed the models hundreds of short text snippets from those books and measured how well they could recite the next lines. The titles were a random sampling of popular, lesser-known, and public domain works drawn from the now-defunct and controversial Books3 dataset that Meta used to train its models, as well as books by plaintiffs in the recent and ongoing Kadrey v. Meta class-action lawsuit.

According to Mark A. Lemley, one of the study authors, this finding might have some interesting implications. AI companies argue that their models are generative—as in, they make new stuff, rather than just being fancy search engines. On the other hand, authors and news outlets are suing on the basis that AI is just remixing existing material, including copyrighted content. “I think what we show in the paper is that neither of those characterizations is accurate,” says Lemley.

The paper shows that the capacity of Meta’s popular Llama 3.1 70B to recite passages from The Sorcerer’s Stone and 1984, among other books, is way higher than could happen by chance. This could indicate that LLMs are not just trained using books, but might actually be storing entire copies of the books themselves. Under copyright law, that might mean the model is less “inspired by” and more “a bootleg copy of” certain texts.

It’s hard to prove that a model has “memorized” something, because it’s hard to see inside. But LLMs are trained on text broken into little chunks of data called ‘tokens,’ like words or punctuation, and they learn the mathematical relationships between them. Tokens all have varying probabilities of following each other or getting strung together in a specific order.

The researchers were able to extract sections of various books by repeatedly prompting the models with selected lines. They split each book into 100-token overlapping strings, then presented the model with the first 50-token half and measured how well it could produce the second. This might take a few tries, but ultimately the study was able to reproduce 91 percent of The Sorcerer’s Stone with this method.
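The extraction setup can be sketched in a few lines. This is a simplified illustration, not the researchers' code: the function names are invented, the stride between overlapping windows is an assumed parameter (the paragraph above doesn't give one), token IDs stand in for a real tokenizer's output, and the coverage function assumes a whole window counts as "reproduced" when the model regenerates its second half, which may differ from how the paper tallied its 91 percent figure.

```python
from typing import List, Sequence, Tuple


def make_windows(
    tokens: Sequence[int], window: int = 100, stride: int = 10
) -> List[Tuple[int, Sequence[int], Sequence[int]]]:
    """Split a tokenized book into overlapping windows, each returned as (start, prefix, suffix)."""
    half = window // 2
    windows = []
    for start in range(0, len(tokens) - window + 1, stride):
        chunk = tokens[start:start + window]
        # The model is prompted with the prefix; its output is compared with the suffix.
        windows.append((start, chunk[:half], chunk[half:]))
    return windows


def coverage(num_tokens: int, reproduced_starts: List[int], window: int = 100) -> float:
    """Fraction of the book's token positions inside at least one reproduced window."""
    covered = [False] * num_tokens
    for start in reproduced_starts:
        for i in range(start, min(start + window, num_tokens)):
            covered[i] = True
    return sum(covered) / num_tokens if num_tokens else 0.0
```

Because the windows overlap, a book can be mostly "covered" even if the model only succeeds on a subset of prompts, which is why a single coverage percentage summarizes how much of the text is recoverable.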

“There’s no way, it’s really improbable, that it can get the next 50 words right if it hadn’t memorized it,” James Grimmelmann, Tessler Family Professor of Digital and Information Law at Cornell, who has worked to define “memorization” in this space, told 404 Media.
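The chain rule of probability shows why Grimmelmann's intuition holds. For a 100-token string whose first 50 tokens are given as the prompt, the probability that sampling from the model produces exactly the original last 50 tokens is the product of the probabilities it assigns to each of them in turn. The per-token value of 0.5 in the second line is a purely illustrative assumption, not a figure from the paper: even if the model gave every correct token a 50 percent chance, getting the whole continuation right by luck would be vanishingly unlikely.

$$
P(x_{51}, \dots, x_{100} \mid x_1, \dots, x_{50}) = \prod_{t=51}^{100} P(x_t \mid x_1, \dots, x_{t-1})
$$

$$
\prod_{t=51}^{100} 0.5 = 0.5^{50} = 2^{-50} \approx 8.9 \times 10^{-16}
$$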

OpenAI has called memorization “a rare failure of the learning process,” and says that it sometimes happens when the topic in question appears many times in training data. It also says that intentionally getting their LLMs to spit out memorized data “is not an appropriate use of our technology and is against our terms of use.”

The study’s authors say in their paper that if the model is storing a book in its memory, the model itself could be considered to literally “be” a copy of the book. If that’s the case, then distributing the LLM at all might be legally equivalent to bootlegging a DVD. And this could mean that a court might order the destruction of the model itself, in the same way courts have ordered the destruction of caches of pirated film box sets. This has never happened in the AI space, and might not be possible, given how widespread these models are. Meta doesn’t release usage statistics for its different LLMs, but 3.1 70B is one of its most popular. The Stanford paper estimates that the Llama 3.1 70B model has been downloaded a million times since its release, so, technically, Meta could have accidentally distributed a million pirate versions of The Sorcerer’s Stone.

The paper found that different Llama models had memorized widely varying amounts of the tested books. “There are lots of books for which it has essentially nothing,” said Lemley. Some models were amazing at regurgitating and others weren’t, suggesting that specific choices made in training the 3.1 70B version had likely led to memorization, the researchers said. That could be as simple as the choice not to remove duplicated training data, or the fact that Harry Potter and 1984 are pretty popular books online. For comparison, the researchers found that the Game of Thrones books were highly memorized, but Twilight books weren’t memorized at all.
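Deduplication, one of the training choices mentioned above, means dropping repeated copies of the same text before training so that no passage is seen over and over. The sketch below shows only the simplest form, exact-match deduplication by hashing lightly normalized text. It is a generic illustration, not a description of Meta's pipeline; the function name `dedupe_exact` is invented, and production pipelines often add near-duplicate detection on top of this.

```python
import hashlib
from typing import Iterable, List


def dedupe_exact(docs: Iterable[str]) -> List[str]:
    """Keep the first copy of each document; texts differing only in case or whitespace count as duplicates."""
    seen = set()
    kept = []
    for doc in docs:
        normalized = " ".join(doc.lower().split())  # lowercase and collapse runs of whitespace
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept
```

Hashing keeps memory use to one fixed-size digest per unique document, but exact matching misses copies that differ by even a single word, such as a book re-uploaded with a different header.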

Grimmelmann said he believes their findings might also be good news overall for those seeking to regulate AI companies. If courts rule against allowing extensive memorization, “then you could give better legal treatment to companies that have mitigated or prevented it than the companies that didn't,” he said. “You could just say, if you memorize more than this much of a book, we'll consider that infringement. It's up to you to figure out how to make sure your models don't memorize more than that.”


The FOIA Forum is a livestreamed event for paying subscribers where we talk about how to file public records requests and answer questions. If you're not already signed up, please consider doing so here.

Recently we had a FOIA Forum where we focused on our article This ‘College Protester’ Isn’t Real. It’s an AI-Powered Undercover Bot for Cops.


A massive data center for Meta’s AI will likely lead to rate hikes for Louisiana customers, but Meta wants to keep the details under wraps.

Holly Ridge is a rural community bisected by US Highway 80, gridded with farmland, with a big creek—it is literally named Big Creek—running through it. It is home to rice and grain mills and an elementary school and a few houses. Soon, it will also be home to Meta’s massive, 4 million square foot AI data center hosting thousands of perpetually humming servers that require billions of watts of power to run. And that energy-guzzling infrastructure will be partially paid for by Louisiana residents.

The plan is part of what Meta CEO Mark Zuckerberg said would be “a defining year for AI.” On Threads, Zuckerberg boasted that his company was “building a 2GW+ datacenter that is so large it would cover a significant part of Manhattan,” posting a map of Manhattan along with the data center overlaid. Zuckerberg went on to say that over the coming years, AI “will drive our core products and business, unlock historic innovation, and extend American technology leadership. Let's go build! 💪”
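Because watts measure power (a rate of use) rather than energy, multiplying by time gives a rough sense of scale. As a back-of-the-envelope upper bound, assuming the facility drew a constant 2 gigawatts, Zuckerberg's "2GW+" figure, every hour of a 365-day year (real data centers rarely run at a steady full load), the arithmetic is:

$$
2\ \text{GW} \times 8{,}760\ \text{h} = 17{,}520\ \text{GWh} \approx 17.5\ \text{TWh per year}
$$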

What Zuckerberg did not mention is that "Let's go build" refers not only to the massive data center but also to three new Meta-subsidized gas power plants and a transmission line to fuel it, serviced by Entergy Louisiana, the region’s energy monopoly.

Key details about Meta’s investments with the data center remain vague, and Meta’s contracts with Entergy are largely cloaked from public scrutiny. But what is known is the $10 billion data center has been positioned as an enormous economic boon for the area—one that politicians bent over backward to facilitate—and Meta said it will invest $200 million into “local roads and water infrastructure.”

A January report from NOLA.com said that the state had rewritten zoning laws, promised to change a law so that it no longer had to put state property up for public bidding, and rewritten what was supposed to be a tax incentive for broadband internet, meant to bridge the digital divide, so that it applied only to data centers, all with the goal of luring in Meta.

But Entergy Louisiana’s residential customers, who live in one of the poorest regions of the state, will see their utility bills increase to pay for Meta’s energy infrastructure, according to Entergy’s application. Entergy estimates that amount will be small and will only cover a transmission line, but advocates for energy affordability say the costs could balloon depending on whether Meta agrees to finish paying for its three gas plants 15 years from now. The short-term rate increases will be debated in a public hearing before state regulators that has not yet been scheduled.

The Alliance for Affordable Energy called it a “black hole of energy use,” and said “to give perspective on how much electricity the Meta project will use: Meta’s energy needs are roughly 2.3x the power needs of Orleans Parish … it’s like building the power impact of a large city overnight in the middle of nowhere.”

404 Media reached out to Entergy for comment but did not receive a response.

By 2030, Entergy’s electricity prices are projected to increase 90 percent from where they were in 2018, although the company attributes much of that to damage to infrastructure from hurricanes. The state already has a high energy cost burden, in part because of storm damage to infrastructure and because sweltering heat, made worse by climate change, drives air conditioner use. The state's homes largely are not energy efficient, with many porous older buildings that don’t retain heat in the winter or remain cool in the summer.

“You don't just have high utility bills, you also have high repair costs, you have high insurance premiums, and it all contributes to housing insecurity,” said Andreanecia Morris, a member of Housing Louisiana, which is opposed to Entergy’s gas plant application. She believes Meta’s data center will make it worse. And Louisiana residents have reasons to distrust Entergy when it comes to passing off costs of new infrastructure: in 2018, the company’s New Orleans subsidiary was caught paying actors to testify on behalf of a new gas plant. “The fees for the gas plant have all been borne by the people of New Orleans,” Morris said.

In its application to build new gas plants and in public testimony, Entergy says the cost of Meta’s data center to customers will be minimal and has even suggested Meta’s presence will make their bills go down. But Meta’s commitments are temporary, many of Meta’s assurances are not binding, and crucial details about its deal with Entergy are shielded from public view, a structural issue with state energy regulators across the country.

AI data centers are being approved at a breakneck pace across the country, particularly in poorer regions, where they are pitched as economic development projects that will boost property tax receipts and bring in jobs, and where they’re offered sizable tax breaks. Data centers typically don’t hire many people, though; most of their jobs are in security and janitorial work, along with temporary construction work. And the costs to the utility’s other customers can remain hidden because of a lack of scrutiny and the limited power of state energy regulators. Many data centers, like the one Meta is building in Holly Ridge, are being powered by fossil fuels, which have been linked to respiratory illness and other health risks and which emit greenhouse gases that fuel climate change. In Memphis, a massive data center built to launch a chatbot for Elon Musk’s AI company is powered by smog-spewing methane turbines, in a region that leads the state for asthma rates.

“In terms of how big these new loads are, it's pretty astounding and kind of a new ball game,” said Paul Arbaje, an energy analyst with the Union of Concerned Scientists, which is opposing Entergy’s proposal to build three new gas-powered plants in Louisiana to power Meta’s data center.

Entergy Louisiana submitted a request to the state’s regulatory body to approve the construction of the new gas-powered plants that would create 2.3 gigawatts of power and cost $3.2 billion in the 1,440-acre Franklin Farms megasite in Holly Ridge, an unincorporated community of Richland Parish. It is the first big data center announced since Louisiana passed large tax breaks for data centers last summer.

In its application to the public utility commission for the gas plants, Entergy says that Meta has a planned investment of $5 billion in the region to build the gas plants in Richland Parish, Louisiana. The application also claims that the data center will employ 300-500 people with an average salary of $82,000 in what it points out is “a region of the state that has long struggled with a lack of economic development and high levels of poverty.” Meta’s official projection is that it will employ more than 500 people once the data center is operational. Entergy plans for the gas plants to be online by December 2028.

In testimony, Entergy officials refused to answer specific questions about job numbers, saying that the numbers are projections based on public statements from Meta.

A spokesperson for Louisiana Economic Development told 404 Media in an email that Meta “is contractually obligated to employ at least 500 full-time employees in order to receive incentive benefits.”

When asked about jobs, Meta pointed to a public-facing list of its data centers, many of which the company says employ more than 300 people. A spokesperson said that the projections for the Richland Parish site are based on the scale of the 4 million square foot data center. The spokesperson said the jobs will range from “engineering and other technical positions to operational roles and our onsite culinary staff.”

When asked if its job commitments are binding, the spokesperson declined to answer, saying, “We worked closely with Richland Parish and Louisiana Economic Development on mutually beneficial agreements that will support long-term growth in the area.”

Others are not as convinced. “Show me a data center that has that level of employment,” says Logan Burke, executive director of the Alliance for Affordable Energy in Louisiana.

Entergy has argued the new power plants are necessary to meet the energy demand of Meta’s massive hyperscale data center, which will be Meta’s largest data center and potentially the largest data center in the United States. That demand amounts to a 25 percent increase in Entergy Louisiana’s current load, according to the Alliance for Affordable Energy.

Entergy requested an exemption from a state law that requires it to issue a public request for proposals, a process meant to ensure that it develops energy at the lowest cost, claiming in its application and testimony that this process would slow it down and cause it to lose its contract with Meta.

Meta has agreed to subsidize the first 15 years of payments for construction of the gas plants, but the plants’ construction is being financed over 30 years. At the 15-year mark, Meta’s contract with Entergy ends. At that point, Meta may decide it doesn’t need three gas plants’ worth of energy because computing power has become more efficient or because its AI products are not profitable enough. Louisiana residents would be stuck with the remaining bill.

“It's not that they're paying the cost, they're just paying the mortgage for the time that they're under contract,” explained Devi Glick, an electric utility analyst with Synapse Energy.

When asked about the costs for the gas plants, a Meta spokesperson said, “Meta works with our utility partners to ensure we pay for the full costs of the energy service to our data centers.” The spokesperson said that any rate increases will be reviewed by the Louisiana Public Service Commission. These applications, called rate cases, are typically submitted by energy companies based on a broad projection of new infrastructure projects and energy needs.

Meta has technically not finalized its agreement with Entergy, but Glick believes the company has already invested enough in the endeavor that it is unlikely to pull out now. Other companies have been reconsidering their gamble on AI data centers: Microsoft reversed course on centers requiring a combined 2 gigawatts of energy in the U.S. and Europe. Meta swept in to take on some of the leases, according to Bloomberg.

And in the short-term, Entergy is asking residential customers to help pay for a new transmission line for the gas plants at a cost of more than $500 million, according to Entergy’s application to Louisiana’s public utility board. In its application, the energy giant said customers’ bills will only rise by $1.66 a month to offset the costs of the transmission lines. Meta, for its part, said it will pay up to $1 million a year into a fund for low-income customers. When asked about the costs of the new transmission line, a Meta spokesperson said, “Like all other new customers joining the transmission system, one of the required transmission upgrades will provide significant benefits to the broader transmission system. This transmission upgrade is further in distance from the data center, so it was not wholly assigned to Meta.”

When Entergy was questioned in public testimony on whether the new transmission line would need to be built even without Meta’s massive data center, the company declined to answer, saying the question was hypothetical.

Some details of Meta’s contract with Entergy have been made available to groups that have legally intervened in Entergy’s application, which allows them to submit testimony or request data from the company. These parties include the Alliance for Affordable Energy, the Sierra Club, and the Union of Concerned Scientists.

But Meta—which will become Entergy’s largest customer by far and whose presence will impact the entire energy grid—is not required to answer questions or divulge any information to the energy board or any other parties. The Alliance for Affordable Energy and Union of Concerned Scientists attempted to make Meta a party to Entergy’s application—which would have required it to share information and submit to questioning—but a judge denied that motion on April 4.

The public utility commissions that approve energy infrastructure in most states are the main democratic lever to ensure that data centers don’t negatively impact consumers. But they have no oversight over the tech companies running the data centers or the private companies that build the centers, leaving residential customers, consumer advocates, and environmentalists in the dark. This is because they approve the power plants that fuel the data centers but do not have jurisdiction over the data centers themselves.

“This is kind of a relic of the past where there might be some energy service agreement between some large customer and the utility company, but it wouldn't require a whole new energy facility,” Arbaje said.

A research paper by Ari Peskoe and Eliza Martin published in March looked at 50 regulatory cases involving data centers, and found that tech companies were pushing some of the costs onto utility customers through secret contracts with the utilities. The paper found that utilities were often parroting rhetoric from AI-boosting politicians, including President Biden, to suggest that pushing through permitting for AI data center infrastructure is a matter of national importance.

“The implication is that there’s no time to act differently,” the authors wrote.

In written testimony sent to the public service commission, Entergy Louisiana CEO Phillip May argued that the company had to bypass the legally required request for proposals, and the requirement to find the cheapest energy sources, in order to win over Meta.

“If a prospective customer is choosing between two locations, and if that customer believes that location A can more quickly bring the facility online than location B, that customer is more likely to choose to build at location A,” he wrote.

Entergy also argues that building new gas plants will in fact lower electricity bills because Meta, as the largest customer for the gas plants, will pay a disproportionate share of energy costs. Naturally, some are skeptical that Entergy would overcharge what will be by far its largest customer to subsidize its residential customers. “They haven't shown any numbers to show how that's possible,” Burke says of this claim. Meta didn’t have a response to this specific claim when asked by 404 Media.

Some details, like how much energy Meta will really need, its hiring plans in the area, and its commitment to renewables, are still cloaked in mystery.

“We can't ask discovery. We can't depose. There's no way for us to understand the agreement between them without [Meta] being at the table,” Burke said.

It’s not just Entergy. Big energy companies in other states are also pushing costly fossil fuel infrastructure to court data centers and passing the costs onto captive residents. In Kentucky, the energy company that serves the Louisville area is proposing two new gas plants for hypothetical data centers that have yet to be contracted by any tech company. The company, a subsidiary of PPL Corporation, is also planning to offload the cost of new energy supply onto its residential customers just to become more competitive for data centers.

“It's one thing if rates go up so that customers can get increased reliability or better service, but customers shouldn't be on the hook to pay for new power plants to power data centers,” said Cara Cooper, a coordinator with Kentuckians for Energy Democracy, which has intervened in an application for new gas plants there.

These rate increases don’t take into account the downstream effects on energy prices: as large data center loads inevitably soak up the supply of materials and fuel, the cost of energy goes up to compensate, with everyday customers footing the bill, according to Glick with Synapse.

Glick says Entergy’s gas plants may not even be enough to satisfy the energy needs of Meta’s massive data center. In written testimony, Glick said that Entergy will have to either contract with a third party for more energy or build even more plants down the line to power the facility.

To fill the gap, Entergy has not ruled out lengthening the life of some of its coal plants, which it had planned to close in the next few years. The company already pushed back the deactivation date of one of its coal plants from 2028 to 2030.

The increased demand for gas power for data centers has already created a widely reported bottleneck for gas turbines, the majority of which are built by three companies. One of those companies, Siemens Energy, told Politico that turbines are “selling faster than they can increase manufacturing capacity,” which the company attributed to data centers.

Most of the organizations concerned about the situation in Louisiana view Meta’s massive data center as inevitable and are trying to soften its impact by getting Entergy to utilize more renewables and make more concrete economic development promises.

Andreanecia Morris, with Housing Louisiana, believes the lack of transparency from public utility commissions is a bigger problem than just Meta. “Simply making Meta go away, isn't the point,” Morris says. “The point has to be that the Public Service Commission is held accountable.”

Burke says Entergy owns less than 200 megawatts of renewable energy in Louisiana, a fraction of the fossil fuel capacity it is proposing to power Meta’s center. Entergy was approved by Louisiana’s public utility commission to build out three gigawatts of solar energy last year, but has yet to build any of it.

“They're saying one thing, but they're really putting all of their energy into the other,” Burke says.

New gas plants are hugely troubling for the climate. But ironically, advocates for affordable energy are equally concerned that the plants will sit unused, with Louisiana residents stuck with the financing for their construction and upkeep. Generative AI has yet to prove its profitability, and the computing-heavy strategy of American tech companies may prove unnecessary given less resource-intensive alternatives coming out of China.

“There's such a real threat in such a nascent industry that what is being built is not what is going to be needed in the long run,” said Burke. “The challenge remains that residential rate payers in the long run are being asked to finance the risk, and obviously that benefits the utilities, and it really benefits some of the most wealthy companies in the world, but it sure is risky for the folks who are living right next door.”

The Alliance for Affordable Energy expects the commission to make a decision on the plants this fall.

From 404 Media via this RSS feed

Welcome back to the Abstract!

This week, we’re moving in next to anacondas, so watch your back and lock the henhouse. Then, parenthood tips from wild baboons, the “cognitive debt” of ChatGPT, a spaceflight symphony, and a bizarre galaxy that is finally coming into view.

When your neighbor is an anaconda

Anacondas are one of the most spectacular animals in South America, inspiring countless myths and legends. But these iconic boas, which can grow to lengths of 30 feet, are also a pest to local populations in the Amazon basin, where they prey on livestock.

To better understand these nuanced perceptions of anacondas, researchers interviewed more than 200 residents of communities in the várzea regions of the lower Amazon River about their experiences with the animals. The resulting study is packed with amazing stories and insights about the snakes, which are widely reviled as thieves and feared for their predatory prowess.

“Fear of the anaconda (identified in 44.5% of the reports) is related to the belief that it is a treacherous and sly animal,” said co-authors led by Beatriz Nunes Cosendey of the Mamirauá Sustainable Development Reserve and Juarez Carlos Brito Pezzuti of the Federal University of Pará.

“The interviewees convey that the anaconda is a silent creature that arrives without making any noise, causing them to feel uneasy and always vigilant during fishing…with the fear of having their canoe flooded in case of an attack,” the team added. “Some dwellers even reported being more afraid of an anaconda than of a crocodile because the latter warns when it is about to attack.”

One of the Amazonian riverine communities where the research was conducted. Image: Beatriz Cosendey.

But while anacondas are eerily stealthy, they also have their derpy moments. The snakes often break into chicken coops to feast on the poultry, but then get trapped because their engorged bodies are too big to escape through the same gaps they used to enter.

“Dwellers expressed frustration at having to invest time and money in raising chickens, and then lose part of their flock overnight,” the team said. “One interviewee even mentioned retrieving a chicken from inside an anaconda’s belly, as it had just been swallowed and was still fresh.”

Overall, the new study presents a captivating portrait of anaconda-human relations, and concludes that “the anaconda has lost its traditional role in folklore as a spiritual and mythological entity, now being perceived in a pragmatic way, primarily as an obstacle to free-range poultry farming.”

Monkeying around with Dad

Coming off of Father’s Day, here is a story about the positive role that dads can play for their daughters—for baboons, as well as humans. A team tracked the lifespans of 216 wild female baboons in Amboseli, Kenya, and found that subjects who received more paternal care had significantly better outcomes than their peers.

Male baboon with infant in the Amboseli ecosystem, Kenya. Image: Elizabeth Archie, professor at Notre Dame.

“We found that juvenile female baboons who had stronger paternal relationships, or who resided longer with their fathers, led adult lives that were 2–4 years longer than females with weak or short paternal relationships,” said researchers led by David Jansen of the Midwest Center of Excellence for Vector-Borne Disease. “Because survival predicts female fitness, fathers and their daughters may experience selection to engage socially and stay close in daughters’ early lives.”

This all reminds me of that old episode of The Simpsons where Lisa calls Homer a baboon. While Homer was clearly hurt, it turns out that baboons might not be the worst animal-based insult for a daughter to throw at her dad.

A case for staying ChatGPT-free

ChatGPT may hinder creativity and learning skills in students who use it to write essays, relative to students who don’t, according to an exhaustive new preprint study posted on arXiv. This research has not yet been peer-reviewed, and has a relatively small sample size of 54 subjects, but it still contributes to rising concerns about the cognitive toll of AI assistants.

Researchers led by Nataliya Kosmyna of the Massachusetts Institute of Technology divided the subjects, all between 18 and 39 years old, into three groups that wrote SAT essays using OpenAI’s ChatGPT (LLM group), Google’s search engine, or no assistance (dubbed “Brain-only”).

“As demonstrated over the course of 4 months, the LLM group's participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring,” the team said. “The LLM group also fell behind in their ability to quote from the essays they wrote just minutes prior.”

When I asked ChatGPT for its thoughts on the study, it commented that “these results are both interesting and plausible, though they should be interpreted cautiously given the early stage of the research and its limitations.” It later suggested that “cognitive offloading is not always bad.”

This study is a bop

Even scientists can’t resist evocative language now and then—we’re all only human. Case in point: A new study likens the history of Asia’s space industry to “a musical concert” and then really runs with the metaphor.

“The region comprises a diverse patchwork of nations, each contributing different instruments to the regional space development orchestra,” said researchers led by Maximilien Berthet of the University of Tokyo. “Its history consists of three successive movements” starting with “the US and former USSR setting the tone for the global space exploration symphony” and culminating with modern Asian spaceflight as “a fast crescendo in multiple areas of the region driven in part by private initiative.”

Talk about a space opera. The rest of the study provides a comprehensive review of Asian space history, but I cannot wait for the musical adaptation.

Peekaboo! I galax-see you

In 2001, astronomer Bärbel Koribalski spotted a tiny galaxy peeking out from behind a bright foreground star that had obscured it for decades, earning it the nickname the “Peekaboo Galaxy.” Situated about 22 million light-years from the Milky Way, this strange galaxy is extremely young and metal-poor, resembling the universe’s earliest galaxies.

The Peekaboo galaxy to the right of the star TYC 7215-199-1. Image: NASA, ESA, Igor Karachentsev (SAO RAS); Image Processing: Alyssa Pagan (STScI)

A new study confirms Peekaboo as “the lowest-metallicity dwarf in the Local Volume,” a group of roughly 500 galaxies within 36 million light-years of Earth.

“This makes the Peekaboo dwarf one of the most intriguing galaxies in the Local Volume,” said co-authors Alexei Kniazev of the South African Astronomical Observatory and Simon Pustilnik of the Special Astrophysical Observatory of the Russian Academy of Sciences. “It deserves intensive, multi-method study and is expected to significantly advance our understanding of the early universe’s first building blocks.”

Thanks for reading! See you next week.

Update: The original headline for this piece was "Is ChatGPT Rotting Our Brains? New Study Suggests It Does." We've updated the headline to "ChatGPT May Create 'Cognitive Debt,' New Study Finds" to match the terminology used by the researchers.


This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss Deadheads and doxxing sites.

SAM: Anyone reading the site closely this week likely noticed a new name entering the chat. We’re thrilled to welcome Rosie Thomas to the gang for the summer as an editorial intern!

Rosie was previously a software engineer in the personal finance space. Currently halfway through her master’s degree in journalism, Rosie is interested in social movements, how people change their behaviors in the face of new technologies, and “the infinite factors that influence sentiment and opinions,” in her words. In her program, she’s expanding her skills in investigations, audio production, and field recording. She published her first blog post with us on day two, a really interesting (and in 404 style, informatively disturbing) breakdown of a new report that found tens of thousands of camera feeds exposed to the dark web. We’re so excited to see what she does with us this summer!


This article was produced in collaboration with Court Watch, an independent outlet that unearths overlooked court records. Subscribe to them here.

The FBI has accused a Texas man, James Wesley Burger, of planning an Islamic State-style terrorist attack on a Christian music festival and talking about it on Roblox. The feds caught Burger after another Roblox user overheard his conversations about martyrdom and murder and tipped them off. The feds said that when they searched Burger’s phone they found a list of searches that included “ginger isis member” and “are suicide attacks haram in islam.”

According to charging documents, a Roblox player contacted federal authorities after seeing another player called “Crazz3pain” talking about killing people. Screenshots from the server, included in the charging documents, show bearded Roblox avatars dressed in keffiyehs talking about dealing a “greivoius [sic] wound upon followers of the cross.”

“The witness observed the user of Crazz3pain state they were willing, as reported by the Witness, to ‘kill Shia Musilms at their mosque,’” court records said. “Crazz3pain and another Roblox user[…]continued to make violent statements so the witness left the game.”

The witness stayed off of Roblox for two days and when they returned they saw Crazz3pain say something else that worried them, according to the court filing. “The Witness observed Crazz3pain tell Roblox User 1 to check their message on Discord,” the charging document said. “Roblox User 1 replied on Roblox to Crazz3pain, they should delete the photograph of firearms within the unknown Discord chat, ‘in case it was flagged as suspicious…the firearms should be kept hidden.’”

According to the witness, Crazz3pain kept talking about his desire to commit “martyrdom” at a Christian event and said that he wanted to “bring humiliation to worshippers of the cross.” The Witness allegedly asked Crazz3pain if the attack would happen at a church service and Crazz3pain told them it would happen at a concert.

Someone asked Crazz3pain when it would happen. “‘It will be months…Shawwal…April,’” Crazz3pain said. Shawwal is the month after Ramadan in the Islamic calendar. The conversations the witness shared with the FBI happened on January 21 and 23, 2025.

Roblox gave authorities Crazz3pain’s email address, name, physical address, and IP address, and it all pointed back to James Wesley Burger. The FBI searched Burger’s home on February 28 and discovered that someone in his family had installed a keylogger on the laptop he used to play Roblox, and that it had captured much of what he’d been typing while playing the game. The family turned the records over to the feds.

“The safety of our community is among our highest priorities. In this case, we moved swiftly to assist law enforcement’s investigation before any real-world harm could occur and investigated and took action in accordance with our policies. We have a robust set of proactive and preventative safety measures designed to help swiftly detect and remove content that violates our policies," a spokesperson for Roblox told 404 Media. "Our Community Standards explicitly prohibit any content or behavior that depicts, supports, glorifies, or promotes terrorist or extremist organizations in any way. We have dedicated teams focused on proactively identifying and swiftly removing such content, as well as supporting requests from and providing assistance to law enforcement. We also work closely with other platforms and in close collaboration with safety organizations to keep content that violates our policies off our platform, and will continue to diligently enforce our policies.”

Burger’s plan to kill Christians was allegedly captured by the keylogger. “I’ve come to conclude it will befall the 12 of Shawwal aa/And it will be a music festival /Attracting bounties of Christians s/In’shaa’allah we will attain martyrdom /And deal a grevious [sic] wound upon the followers of the Cross /Pray for me and enjoin yourself to martyrdom,” he allegedly typed in Roblox, according to court records.

The FBI then interviewed Burger in his living room and he admitted he used the Crazz3pain account to play Roblox. The feds asked him about his alleged plan to kill Christians at a concert. Burger said it was, at the time, “mostly a heightened emotional response,” according to the court records.

Burger also said that the details “became exaggerated” but that the goal “hasn’t shifted a bit,” according to the court records. He said he wanted to “[G]et the hell out of the U.S.” And if he can’t, “then, martyrdom or bust.”

He said that his intention with the attack “is something that is meant to or will cause terror,” according to the charging document. When the FBI agent asked if he was a terrorist, Burger said, “I mean, yeah, yeah. By, by the sense and … by my very own definition, yes, I guess, you know, I would be a terrorist.”

When authorities searched his iPhone, they discovered two notes that described how to avoid leaving behind DNA and fingerprints at a crime scene. A third note appeared to explain the attack and was meant to be read after it occurred.

The list of previous searches on his iPhone included “Which month is april in islam,” “Festivals happening near me,” “are suicide attacks haram in islam,” “ginger isis member,” “lone wolf terrorists isis,” and “can tou kill a woman who foesnt[sic] wear hijab.”

Burger has been charged with making violent threats online and may spend time in a federal prison if convicted. This is not the first time something like this has happened on Roblox. The popular children’s game has been a hotspot for extremist behavior, including Nazis and religious terrorists, for years now. Last year, the DOJ accused a Syrian man living in Albania of using Roblox to coordinate a group of American teenagers to disrupt public city council Zoom meetings.


🌘Subscribe to 404 Media to get The Abstract, our newsletter about the most exciting and mind-boggling science news and studies of the week.

Scientists have directly confirmed the location of the universe's “missing” matter for the first time, reports a study published on Monday in Nature Astronomy.

The idea that the universe must contain normal, or “baryonic,” matter that we can’t seem to find goes back to the birth of modern cosmological models. Now, a team has revealed that about 76 percent of all baryons, the ordinary particles that make up planets and stars, exist as gas hidden in the dark expanses between galaxies, known as the intergalactic medium (IGM). Fast radio bursts (FRBs), transient signals with elusive origins, illuminated the missing baryons, according to the researchers. As a bonus, they also identified the most distant FRB ever recorded, at 9.1 billion light years away, in the study.

“Measuring the ‘missing baryons’ with Fast Radio Bursts has been a major long-sought milestone for radio astronomers,” said Liam Connor, an astronomer at the Center for Astrophysics | Harvard & Smithsonian who led the study, in an email. “Until recently, we didn’t have a large-enough sample of bursts to make strong statements about where this ordinary matter was hiding.”

Under the leadership of Caltech professor Vikram Ravi, the researchers constructed the DSA-110 radio telescope—an array of over 100 dishes in the California desert—to achieve this longstanding milestone. “We built up the largest and most distant collection of localized FRBs (meaning we know their exact host galaxy and distance),” Connor explained. “This data sample, plus new algorithms, allowed us to finally make a complete baryon pie chart. There are no longer any missing wedges.”

Baryons are the building blocks of the familiar matter that makes up our bodies, stars, and galaxies, in contrast to dark matter, a mysterious substance that accounts for the vast majority of the universe’s mass. Cosmological models predict that there is much more baryonic matter than we can see in stars and galaxies, which has spurred astronomers into a decades-long search for the “missing baryons” in space.

Scientists have long assumed that most of this missing matter exists in the form of ionized gas in the IGM, but FRBs have opened a new window into these dark reaches, which can be difficult to explore with conventional observatories.

“FRBs complement and improve on past methods by their sensitivity to all the ionized gas in the Universe,” Connor said. “Past methods, which were highly informative but somewhat incomplete, could only measure hot gas near galaxies or clusters of galaxies. There was no probe that could measure the lion’s share of ordinary matter in the Universe, which it turns out is in the intergalactic medium.”

Since the first FRB was detected in 2007, thousands of similar events have been discovered, though astronomers still aren't sure what causes them. Characterized by extremely energetic radio waves that last for mere milliseconds, the bursts typically originate millions or billions of light years from our galaxy. Some repeat, and some do not. Scientists think these pyrotechnic events are fueled by massive compact objects, like neutron stars, but their exact nature and origins remain unclear.

Connor and his colleagues studied a sample of 60 FRB observations that spanned from about 12 million light years away from Earth all the way to a new record holder for distance: FRB 20230521B, located 9.1 billion light years away. With the help of these cosmic searchlights, the team was able to make a new precise measurement of the density of baryonic matter across the cosmic web, which is a network of large-scale structures that spans the universe. The results matched up with cosmological predictions that most of the missing baryons would be blown out into the IGM by “feedback” generated within galaxies. About 15 percent is present in structures that surround galaxies, called halos, and a small remainder makes up stars and other celestial bodies.

“It really felt like I was going in blind without a strong prior either way,” Connor said. “If all of the missing baryons were hiding in galaxy halos and the IGM were gas-poor, that would be surprising in its own way. If, as we discovered, the baryons had mostly been blown into the space between galaxies, that would also be remarkable because that would require strong astrophysical feedback and violent processes during galaxy formation.”

“Now, looking back on the result, it’s kind of satisfying that our data agrees with modern cosmological simulations with strong ‘feedback’ and agrees with the early Universe values of the total abundance of normal matter,” he continued. “Sometimes it’s nice to have some concordance.”

The new measurement might alleviate the so-called S8 (or sigma-8) tension, which is a discrepancy between the overall “clumpiness” of matter in the universe when measured using the cosmic microwave background, which is the oldest light in the cosmos, compared with using modern maps of galaxies and clusters.

“One explanation for this disagreement is that our standard model of cosmology is broken, and we need exotic new physics,” Connor said. “Another explanation is that today’s Universe appears smooth because the baryons have been sloshed around by feedback.”

“Our FRB measurement suggests the baryon cosmic web is relatively smooth, homogenized by astrophysical processes in galaxies (feedback),” he continued. “This would explain the S8 tension without exotic new physics. If that’s the case, then I think the broader lesson is that we really need to pin down these pesky baryons, which have previously been very difficult to measure directly.”

To that end, Connor is optimistic that more answers to these cosmic riddles are coming down the pike.

“The future is looking bright for the field of FRB cosmology,” he said. “We are in the process of building enormous radio telescope arrays that could find tens of thousands of localized FRBs each year,” including the upcoming DSA-2000.

“My colleagues and I think of our work as baby steps towards the bigger goal of fully mapping the ordinary, baryonic matter throughout the whole Universe,” he concluded.



A report from a cybersecurity company last week found that over 40,000 unsecured cameras—including CCTV and security cameras on public transportation, in hospitals, on internet-connected bird feeders and on ATMs—are exposed online worldwide.

Cybersecurity risk intelligence company BitSight was able to access and download content from thousands of internet-connected systems, including domestic and commercial webcams, baby monitors, office security, and pet cams. They also found content from these cameras on dark web sites where people share and sell access to live feeds. “The most concerning examples found were cameras in hospitals or clinics monitoring patients, posing a significant privacy risk due to the highly sensitive nature of the footage,” said João Cruz, Principal Security Research Scientist for the team that produced the report.

The company wrote in a press release that it “doesn’t take elite hacking to access these cameras; in most cases, a regular web browser and a curious mind are all it takes, meaning that 40,000 figure is probably just the tip of the iceberg.”

Depending on the type of login protocol the cameras were using, the researchers were able to access either footage or individual real-time screenshots. Against a background of increasing surveillance by law enforcement and ICE, cameras left open without their owners’ knowledge carry clear potential for abuse.

“Knowing the real number is practically impossible due to the insanely high number of camera brands and models existent in the market,” said Cruz, “each of them with different ways to check if it’s exposed and if it’s possible to get access to the live footage.”

The report outlines more obvious risks, from tracking people’s behavioral patterns and whether they are home in real time in order to plan a burglary, to “shoulder surfing,” or stealing data by watching someone log in to a computer in an office. The report also found cameras in stores, gyms, laundromats, and construction sites, meaning that exposed cameras are monitoring people in their daily lives. The geographic data revealed by the cameras’ IP addresses, combined with commercially available facial-recognition systems, could prove dangerous for people who work at or visit those businesses.

You can find out whether your camera is exposed by using a site like Shodan.io, a search engine that scans for devices connected to the internet, or by trying to access your camera from a device connected to a different network. To minimize vulnerabilities, users should also read the manufacturer’s documentation before plugging in a new camera, and make sure to set their own password on any internet-connected device.

This is because many brands use default logins for their products, and these logins are easy to find online. The BitSight researchers didn’t try to hack into these kinds of cameras or brute-force any passwords, but “if we did so, we firmly believe that the number would be higher,” said Cruz. Older camera systems running deprecated, unmaintained software are more susceptible to this kind of attack; one somewhat brighter spot is that these “digital ghost ships” seem to be decreasing in number as the oldest and least secure among them are replaced or fail completely.

Unsecured cameras attract hackers and malicious actors, and the risks can go beyond the embarrassing, personal, or even individual. In March this year, the hacking group Akira successfully compromised an organization through an unsecured webcam after its first attack attempt was blocked by cybersecurity protocols. In 2024, the Ukrainian government asked citizens to turn off all broadcasting cameras after Russian agents hacked into webcams at a condo association and a car park. The agents altered the direction of the cameras to point toward nearby infrastructure and used the footage in planning strikes. Ukraine blocked the operation of 10,000 internet-connected digital security cameras to prevent further information leaks, and a joint cybersecurity advisory published in May 2025 described continued attacks by Russian espionage units on private and municipal cameras to track materials entering Ukraine.

As Israel and Iran trade blows in a quickly escalating conflict that risks engulfing the rest of the region and drawing Iran and the U.S. into a more direct confrontation, social media is being flooded with AI-generated media that claims to show the devastation but is fake.

The fake videos and images show how generative AI has already become a staple of modern conflict. On one side, AI-generated content of unknown origin is filling the void created by state-sanctioned media blackouts with misinformation; on the other, the leaders of these countries are sharing AI-generated slop to spread the oldest forms of xenophobia and propaganda.

If you want to follow a war as it’s happening, it’s easier than ever. Telegram channels post live streams of bombing raids as they happen, and much of the footage trickles up to X, TikTok, and other social media platforms. There’s more footage of conflict than there’s ever been, but a lot of it is fake.

A few days ago, Iranian news outlets reported that Iran’s military had shot down three F-35s. Israel denied it. As the claim spread, so did supposed images of the downed jets. In one, a massive version of the jet smolders on the ground next to a town. The cockpit dwarfs the nearby buildings and tiny people mill around the downed jet like Lilliputians surrounding Gulliver.

It’s a fake, an obvious one, but thousands of people shared it online. Another image of the supposedly downed jet showed it crashed in a field somewhere in the middle of the night. Its wings were gone and its afterburner still glowed hot. This was also a fake.

Images via X.com.

AI slop is not the sole domain of anonymous amateur and professional propagandists. The leaders of both Iran and Israel are doing it too. The Supreme Leader of Iran is posting AI-generated missile launches on his X account, a match for similar grotesques on the account of Israel’s Minister of Defense.

New tools like Google’s Veo 3 make AI-generated videos more realistic than ever. Iranian news outlet Tehran Times shared a video to X that it said captured “the moment an Iranian missile hit a building in Bat Yam, southern Tel Aviv.” The video was fake. In another video that appeared to come from a TV news segment, a massive missile moved down a long concrete hallway. It’s also clearly AI-generated, and it still shows Veo’s watermark in the bottom-right corner.

#BREAKING Doomsday in Tel Aviv pic.twitter.com/5CDSUDcTY0

— Tehran Times (@TehranTimes79) June 14, 2025

After Iran launched a strike on Israel, Tehran Times shared footage of what it claimed was “Doomsday in Tel Aviv.” A drone shot rotated through scenes of destroyed buildings and piles of rubble. Like the other videos, it was an AI-generated fake, and it had appeared on both a Telegram account and a TikTok channel named “3amelyonn.”

In Arabic, 3amelyonn’s TikTok channel calls itself “Artificial Intelligence Resistance,” but the Telegram account carries no such label. It has been posting on Telegram since 2023, and its first TikTok video, posted in April 2025, is an AI-generated tour through Lebanon showing its cities as smoking ruins. It’s full of the quivering lines and other hallucinations typical of early AI video.

But 3amelyonn’s videos from a month later are more convincing. A video posted on June 5, labeled as Ben Gurion Airport, shows bombed-out buildings and destroyed airplanes. It’s been viewed more than 2 million times. The video of a destroyed Tel Aviv, the one that made it onto Tehran Times, has been viewed more than 11 million times and was posted on May 27, weeks before the current conflict.

Hany Farid, a UC Berkeley professor and founder of GetReal, a synthetic media detection company, has been collecting these fake videos and debunking them.

“In just the last 12 hours, we at GetReal have been seeing a slew of fake videos surrounding the recent conflict between Israel and Iran. We have been able to link each of these visually compelling videos to Veo 3,” he said in a post on LinkedIn. “It is no surprise that as generative-AI tools continue to improve in photo-realism, they are being misused to spread misinformation and sow confusion.”

The spread of AI-generated media about this conflict appears to be particularly bad because both Iran and Israel are asking their citizens not to share media of destruction, which may help the other side with its targeting for future attacks. On Saturday, for example, the Israel Defense Forces asked people not to “publish and share the location or documentation of strikes. The enemy follows these documentations in order to improve its targeting abilities. Be responsible: do not share locations on the web!” Users on social media then fill this vacuum with AI-generated media.

“The casualty in this AI war [is] the truth,” Farid told 404 Media. “By muddying the waters with AI slop, any side can now claim that any other videos showing, for example, a successful strike or human rights violations are fake. Finding the truth at times of conflict has always been difficult, and now in the age of AI and social media, it is even more difficult.”

“We're committed to developing AI responsibly and we have clear policies to protect users from harm and governing the use of our AI tools,” a Google spokesperson told 404 Media. “Any content generated with Google AI has a SynthID watermark embedded and we add a visible watermark to Veo videos too.”

Farid and his team used SynthID to identify the fake videos “alongside other forensic techniques that we have developed over at GetReal,” he said. But checking a video for a SynthID watermark, which is visually imperceptible, requires someone to take the time to download the video and upload it to a separate website. Casual social media scrollers are not taking the time to verify a video they’re seeing by sending it to the SynthID website.

One distinguishing feature of the viral AI slop about the conflict, from 3amelyonn and others, is that the destruction is confined to buildings. There are no humans and no blood in 3amelyonn’s aerial shots of destruction; that kind of imagery is more likely to be blocked both by AI image and video generators and by the social media platforms where these creations are shared. If people do appear, they are observers, as in the F-35 picture, or milling soldiers, as in the tunnel video. Seeing a soldier in active combat or a wounded person is rare.

There’s no shortage of real, horrifying footage from Gaza and other conflicts around the world. AI war spam, however, is almost always bloodless. A year ago, the AI-generated image “All Eyes on Rafah” garnered tens of millions of views. It was created by a Facebook group with the goal of “Making AI prosper.”

This week we start with Joseph’s article about major U.S. airlines selling customers’ flight information to Customs and Border Protection and then telling the agency not to reveal where the data came from. After the break, Emanuel tells us how AI scraping bots are breaking open libraries, archives, and museums. In the subscribers-only section, Jason explains the casual surveillance relationship between ICE and local cops, according to emails he got.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the subscribers-only unlisted YouTube link for the extended video version too. It will also be in the show notes in your podcast player.

Airlines Don't Want You to Know They Sold Your Flight Data to DHS

AI Scraping Bots Are Breaking Open Libraries, Archives, and Museums

Emails Reveal the Casual Surveillance Alliance Between ICE and Local Police

📄This article was primarily reported using public records requests. We are making it available to all readers as a public service. FOIA reporting can be expensive; please consider subscribing to 404 Media to support this work, or send us a one-time donation via our tip jar.

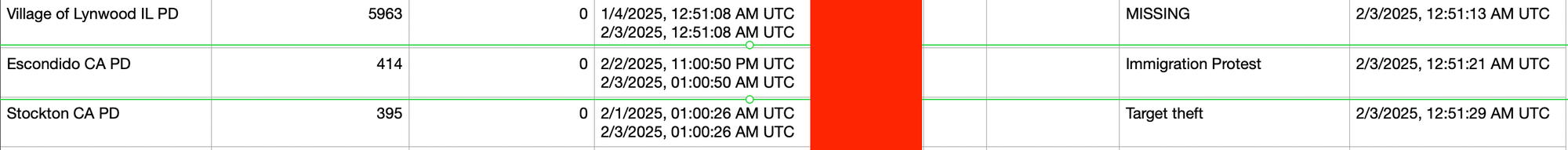

A California police department searched AI-enabled automatic license plate reader (ALPR) cameras in relation to an “immigration protest,” according to internal police data obtained by 404 Media. The data also shows that police departments and sheriff’s offices around the country have repeatedly tapped into the cameras inside California, made by a company called Flock, on behalf of Immigration and Customs Enforcement (ICE), digitally reaching into the sanctuary state in a data sharing practice that experts say is illegal.

Flock allows participating agencies to search not only cameras in their own jurisdiction or state, but nationwide, meaning that local police who may work directly with ICE on immigration enforcement are able to search cameras inside California or other states. But this data sharing is only possible because California agencies have opted in to sharing their data with agencies in other states, making them legally responsible for the data sharing.

The news raises questions about whether California agencies are following the law in their own data sharing practices, threatens to undermine California’s image as a sanctuary state, and highlights the sort of surveillance and investigative tools law enforcement may deploy at immigration-related protests. Over the weekend, millions of people attended No Kings protests across the U.S. 404 Media’s findings come after we revealed police were searching cameras in Illinois on behalf of ICE, and then CalMatters found local law enforcement agencies in California were searching cameras for ICE too.

“I think especially in this current political climate where the government is taking extreme measures to crack down on civil liberties, especially immigrants’ rights being one of those, you can easily see how ALPRs, which is an extremely invasive technology, could be weaponized against that community,” Jennifer Pinsof, a senior staff attorney at activist organization the Electronic Frontier Foundation (EFF), told 404 Media.

💡Do you know anything else about Flock? I would love to hear from you. Using a non-work device, you can message me securely on Signal at joseph.404 or send me an email at joseph@404media.co.

404 Media obtained the data through a public records request to the Redlands Police Department in California. The spreadsheets make up the agency’s “Network Audit,” which lists the searches other agencies have run using its Flock systems since June 1, 2024, and the reason given for each search.