Again?!!

It's like Dracula; dying over and over again.

But it's buried now, so we are all safe.

What do you mean?

It's been slowing down for years and is now about as dead as a 90-year-old in a coma. Or it was just confirmed dead and is therefore about as dead as a 90-year-old with no heartbeat.

We haven't gotten advancements anywhere near the pace of previous generations. None of the small improvements come from a significant increase in capacity; they come from a messy, unstable variety of tricks that, often at other costs, just push against a limit we've clearly reached. I personally have to undervolt mine and just hope it doesn't crash, and I don't use the AI frames because they look bad. It's a mess.

The hardware is strained and the picture is fucking stained.

It's not Moore's law that will describe any increased performance from here. It's other things. Personally, I want to see significantly larger consumer GPUs, since we have to admit we can't shrink transistors to fit more in anymore.

It probably can't just be ATX from here.

I'd like to see significant improvements in the build in general while we scale up. These expensive parts shouldn't be connected by cheap shitty connectors that are so tough to seat and unseat that they jeopardize the parts.

Also, no more floating GPUs. Formalize a standard solution to support the far end properly, even if it scales up to the size of a fucking longboard and the weight of a car tire. Have a plan and include the parts to fully support it.

This period of custom, loose-sitting GPU support pillars has been pathetic. I recently moved, and I cut a toilet paper roll in half and taped it to the bottom of the case to keep any bump from dislodging the GPU during transport, which is a known (and accepted) problem. I fucking hated doing that.

Just put the GPU in its own separate ATX case. Why bother with a PCIe slot?

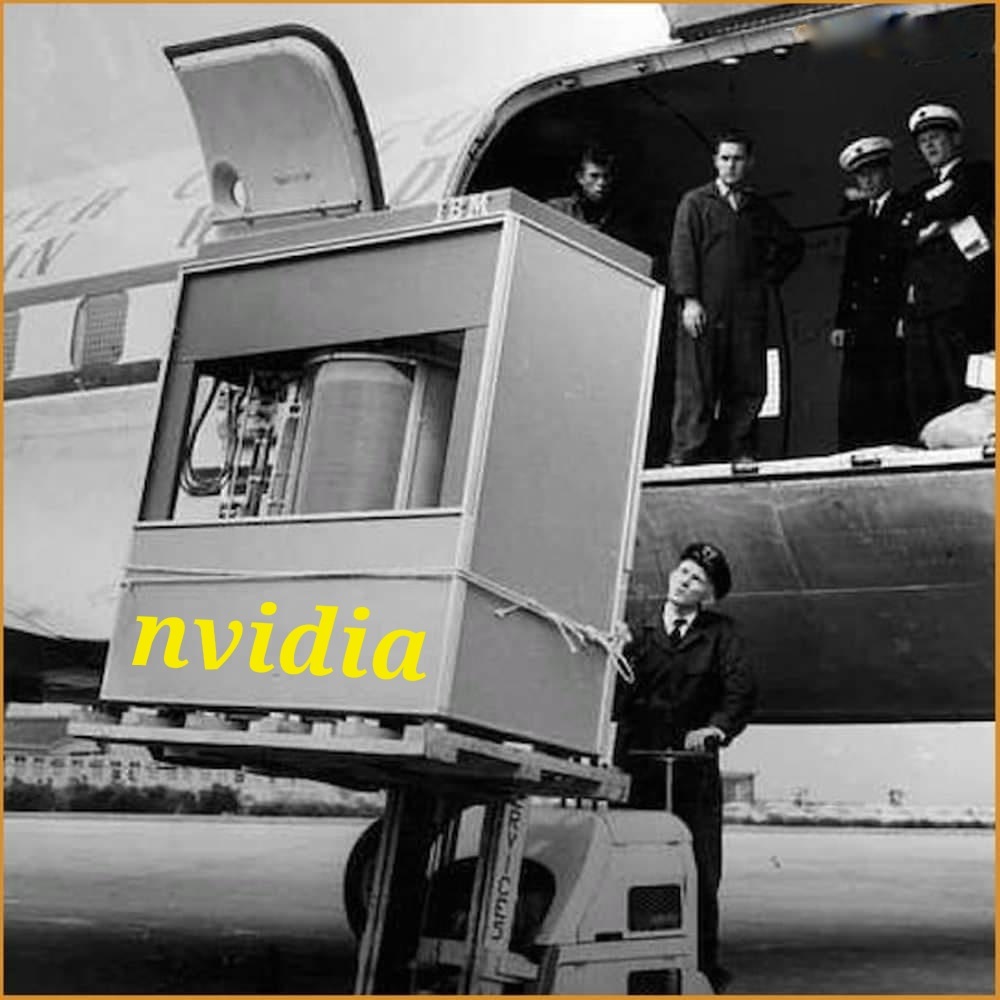

I want a GPU delivered in its own housing on a plane, like those old IBM 5 MB hard drives from the '60s.

You're joking, but we have to scale up. GPUs and CPUs will get bigger and bigger if they're going to be more and more performant. There is no cheating the scale at which electrons just jump across the gaps between traces. It's impressive where we've gotten and how fast we got there, but from now on it's either scale up or stay stuck at a few square centimeters of dense silicon.

We saw this with Blackwell Ultra. Ian Buck, VP of Accelerated Computing business unit at Nvidia, told us in an interview they actually nerfed the chip's double precision (FP64) tensor core performance in exchange for 50% more 4-bit FLOPS.

Whether this is a sign that FP64 is on its way out at Nvidia remains to be seen, but if you really care about double-precision grunt, AMD's GPUs and APUs probably should be at the top of your list anyway.

They're so focused on LLMs that they're hindering the performance of general-purpose compute. It's going to be real great when people realise LLMs don't really do anything and are left with piles of useless hardware.