I'm working on a project to back up my family photos from TrueNAS to Blu-ray discs. I have other, more traditional backups based on restic and zfs send/receive, but I don't like the fact that I could delete every copy using only the mouse and keyboard from my main PC. I want something that can't be ransomwared and that I can't screw up once created.

The dataset is currently about 2TB, and we're adding about 200GB per year. It's a lot of discs, but manageably so. I've purchased good quality 50GB blank discs and a burner, as well as a nice box and some silica gel packs to keep them cool, dark, dry, and generally protected. I'll be making one big initial backup, and then I'll run incremental backups roughly monthly to capture new photos and edits to existing ones, at which time I'll also spot-check a disc or two for read errors using dvdisaster. I'm hoping to get 10 years out of this arrangement, though longer is of course better.
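For a rough sense of the disc count, here is a minimal back-of-the-envelope sketch. It assumes decimal units (2TB = 2000GB) and a full 50GB usable per disc. The `ecc_overhead` parameter is a hypothetical knob for whatever fraction of each disc ends up reserved for error-correction data; the 15% used below is only an illustrative number, not a dvdisaster default.

```python
import math

def discs_needed(data_gb: float, disc_capacity_gb: float = 50.0,
                 ecc_overhead: float = 0.0) -> int:
    """Number of discs required to hold data_gb of archive data.

    ecc_overhead is the (assumed) fraction of each disc set aside
    for error-correction data, between 0 and 1.
    """
    usable_gb = disc_capacity_gb * (1.0 - ecc_overhead)
    return math.ceil(data_gb / usable_gb)

initial_discs = discs_needed(2000)                          # initial 2TB backup
yearly_discs = discs_needed(200)                            # one year of growth
initial_with_ecc = discs_needed(2000, ecc_overhead=0.15)    # illustrative 15%

print(initial_discs, yearly_discs, initial_with_ecc)
```

Real-world numbers will come out a bit higher, since monthly incrementals rarely fill a disc exactly and actual usable capacity is slightly under the nominal 50GB.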

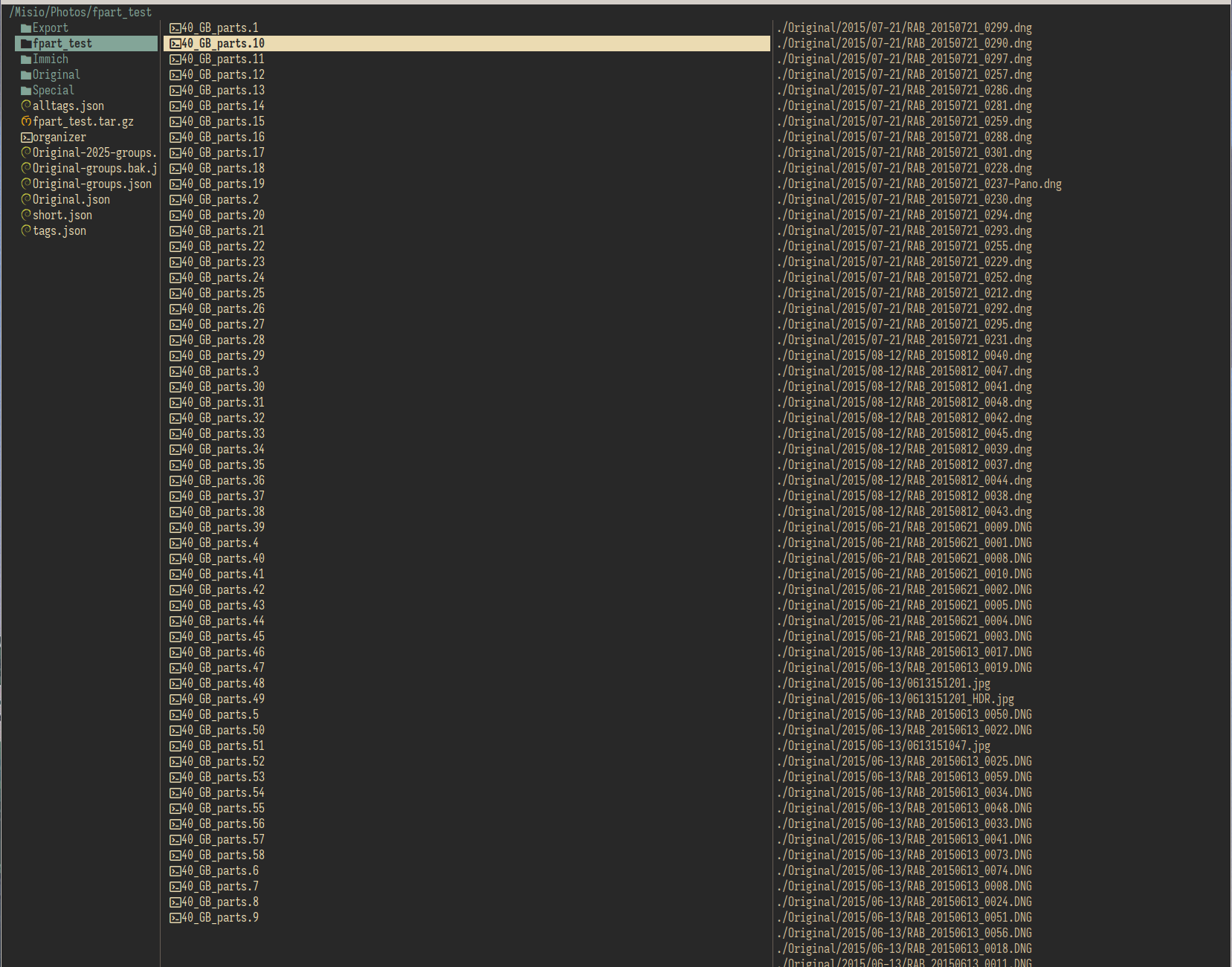

I've got most of the pieces worked out, but the last big question I need to answer is which software I will actually use to create the archive files. I've narrowed it down to two options: dar and bog-standard GNU tar. Both can create multipart, incremental backups, which is the core capability I need.

Dar Advantages (that I care about):

- This is exactly what it's designed to do.

- It can detect and tolerate data corruption. (I'll be adding ECC data to the discs using dvdisaster, but defense in depth is nice.)

- More robust file change detection. It appears to be hash-based, though I haven't confirmed that.

- It allows me to create a database I can use to locate and restore individual files without searching through many disks.

Dar disadvantages:

- It appears to be a pretty obscure, generally inactive project. The documentation looks straight out of the early 2000s and it doesn't have https. I worry it will go offline, or I'll run into some weird bug that ruins the show.

- Doesn't detect renames. Will back up a whole new copy. (Problematic if I get to reorganizing)

- I can't find a maintained GUI project for it, and my wife ain't about to learn a CLI. Would be nice if I'm not the only person in the world who could get photos off of these discs.

Tar Advantages (that I care about):

- battle-tested, reliable, not going anywhere

- It's already installed on every single Linux and Mac machine, and it's trivial to put on a Windows PC.

- Correctly detects renames, does not create new copies.

- There are maintained GUIs available; non-nerds may be able to access

Tar disadvantages:

- I don't see an easy way to locate individual files, beyond grepping through .snar metadata files (that aren't really meant for that).

- The file change detection logic makes me nervous. It appears to be based on modification time and inode numbers. The photos are in a ZFS dataset on TrueNAS, mounted on my local machine via SMB. I don't even know what an inode number is, so how can I be sure that they won't change somehow? Am I stuck with this exact NAS setup until I'm ready to make a whole new base backup? This many Blu-rays aren't cheap and burning them will take a while, so I don't want to do it unnecessarily.
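To make the inode question concrete, here is a minimal sketch of what that metadata looks like from Python. The helper name `change_signature` is made up for illustration, and this is not tar's actual logic, just the same kind of fields (inode number, modification time, size) as reported by `os.stat`. It runs against a throwaway temp directory on a local filesystem. Whether the reported inode numbers stay stable over an SMB mount depends on the client and server, so that is something to measure on the real setup rather than assume.

```python
import os
import tempfile

def change_signature(path):
    """Collect the metadata fields an mtime/inode-based check would look at."""
    st = os.stat(path)
    return {"inode": st.st_ino, "mtime_ns": st.st_mtime_ns, "size": st.st_size}

with tempfile.TemporaryDirectory() as tmp:
    old_path = os.path.join(tmp, "photo.jpg")
    with open(old_path, "wb") as f:
        f.write(b"fake image bytes")   # stand-in for a real photo

    before = change_signature(old_path)

    # Rename within the same filesystem: the name changes, the file does not.
    new_path = os.path.join(tmp, "renamed.jpg")
    os.rename(old_path, new_path)
    after = change_signature(new_path)

print("before:", before)
print("after: ", after)
print("unchanged by rename:", before == after)
```

Running the same `change_signature` on a handful of photos over the SMB mount, then again after a reboot or remount, would show whether the numbers hold steady there.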

I'm genuinely conflicted, but I'm leaning towards dar. Does anyone else have any experience with this sort of thing? Is there another option I'm missing? Any input is greatly appreciated!
